Elon Musk’s Machine for Fascism: A Tale of Three Elections

Since the spring (when I first started writing this post), I’ve been trying to express what I think Elon Musk intended to do with his $44 billion purchase of Twitter: turn it into a Machine for Fascism.

Ben Collins wrote a piece — which he has been working on even longer than I have on this post — that led me to return to it.

Collins returns to some texts sent to Elmo in April 2022, just before he bought Twitter, which referenced an unsigned post published at Revolver News laying out a plan for Twitter.

On the day that public records revealed that Elon Musk had become Twitter’s biggest shareholder, an unknown sender texted the billionaire and recommended an article imploring him to acquire the social network outright.

Musk’s purchase of Twitter, the 3,000-word anonymous article said, would amount to a “declaration of war against the Globalist American Empire.” The sender of the texts was offering Musk, the Tesla and SpaceX CEO, a playbook for the takeover and transformation of Twitter. As the anniversary of Musk’s purchase approaches, the identity of the sender remains unknown.

The text messages described a series of actions Musk should take after he gained full control of the social media platform: “Step 1: Blame the platform for its users; Step 2: Coordinated pressure campaign; Step 3: Exodus of the Bluechecks; Step 4: Deplatforming.”

The messages from the unknown sender were revealed in a court filing last year as evidence in a lawsuit Twitter brought against Musk after he tried to back out of buying it. The redacted documents were unearthed by The Chancery Daily, an independent legal publication covering proceedings before the Delaware Court of Chancery.

The wording of the texts matches the subtitles of the article, “The Battle of the Century: Here’s What Happens if Elon Musk Buys Twitter,” which had been published three days earlier on the right-wing website revolver.news.

Collins lays out how closely the post predicted what has happened since, including an attack on the Anti-Defamation League.

The article on Beattie’s site begins with a baseless claim that censorship on Twitter cost President Donald Trump the 2020 election. “Free speech online is what enabled the Trump revolution in 2016,” the anonymous author wrote. “If the Internet had been as free in 2020 as it was four years before, Trump would have cruised to reelection.”

The author said that “Step 1” after a Musk takeover would be: “Blame the platform for its users.” He or she predicted that “Twitter would be blamed for every so-called act of ‘racism’ ‘sexism’ and ‘transphobia’ occurring on its platform.”

After Musk’s purchase of Twitter was finalized in October 2022, he allowed previously suspended accounts to return. Among them, he restored the account of Trump, whom Twitter had banned after the Jan. 6 Capitol insurrection, as well as the personal accounts of far-right Rep. Marjorie Taylor Greene, R-Ga., and the founder of a neo-Nazi website, Andrew Anglin.

The article predicted that “Step 2” would involve a “Coordinated pressure campaign” by the ADL and other nonprofit groups to get Musk to reinstate the banned accounts. “A vast constellation of activists and non-profits” will lurch into action to “put more and more pressure on the company to change its ways,” the article reads.

The next step, the revolver.news article predicted, would be the “Exodus of the bluechecks.” The term “bluechecks” refers to a former identity verification system on Twitter that confirmed the authenticity of the accounts of celebrities, public figures and journalists.

Musk experimented with and ultimately eliminated Twitter’s verification system of “bluechecks.” As the article predicted, the removal resulted in a public backlash and an exponential drop in advertisers and revenue. Other developments, including Musk’s drastically reducing the number of staffers who monitor tweets and a rise in hate speech, also contributed to the dynamic.

The article predicted that a final step, “Step 4,” would be the “deplatforming” of Twitter itself. He said a Musk-owned Twitter would face the same fate as Parler, a platform that presented itself as a “free speech” home for the right. After numerous calls for violence on Jan. 6 were posted on Parler, Google and Apple removed it from their app stores on the grounds that it had allowed too many posts that promoted violence, crime and misinformation.

Collins notes that the identity of the person who wrote the post on Revolver and sent the texts to Elmo has never been revealed. He seems to think it is Darren Beattie, the publisher of Revolver, whose white supremacist sympathies got him fired from Trump’s White House.

I’m not convinced the post was from Beattie. Others made a case that the person who texted Elmo was Stephen Miller (not least because there’s a redaction where his name might appear elsewhere in the court filing).

But I find Collins’ argument persuasive: that Elmo adopted a plan to use Twitter as a Machine for Fascism from the start, guided in part by that post, a post that has some tie to Russophile propagandist Beattie.

Then again, I’ve already been thinking about the way that Elmo was trying to perfect a Machine for Fascism.

2016: Professionalizing Trolling

One thing that got me thinking about Elmo’s goals for Twitter was reading the chat logs from several Twitter listservs that far right trolls used to coordinate during the 2016 election, which were introduced as exhibits in Douglass Mackey’s trial for attempting to convince Hillary voters to text their votes rather than cast them at polling places.

The trolls believed, in real time, that their efforts were historic.

On the day Trump sealed his primary win in 2016, for example, Daily Stormer webmaster Andrew “Weev” Auernheimer boasted on a Fed Free Hate Chat that, “it’s fucking astonishing how much reach our little group here has between us, and it’ll solidify and grow after the general.” “This is where it all started,” Douglass Mackey replied, according to exhibits introduced at his trial. “We did it.”

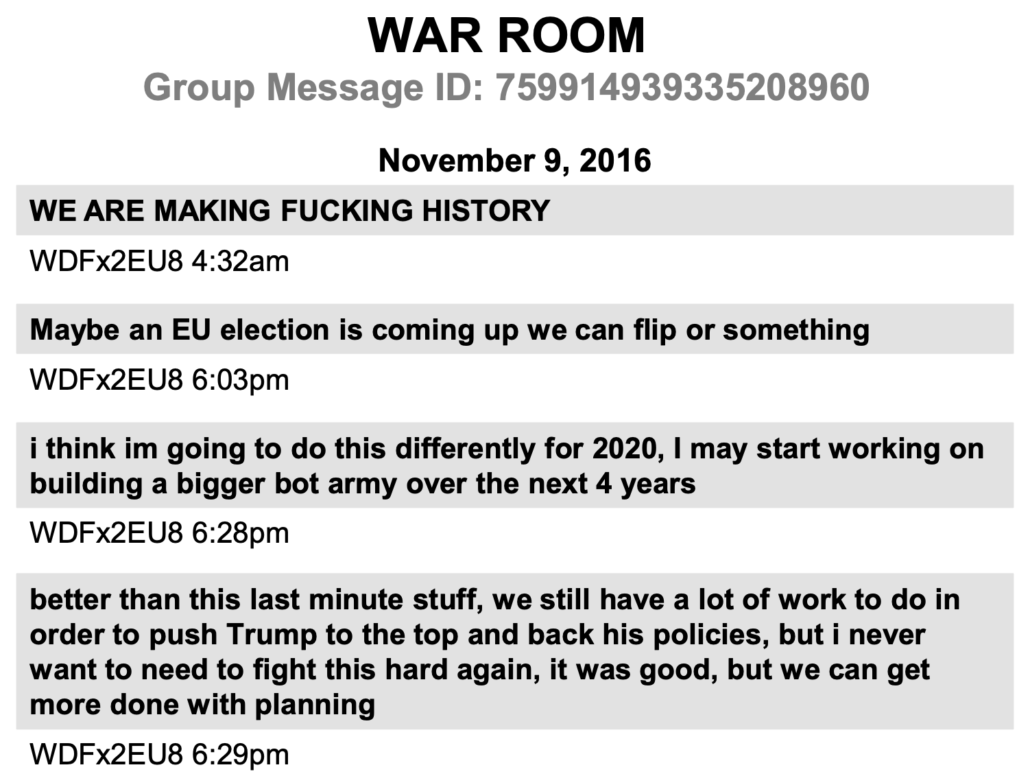

After Trump’s November win became clear, Microchip — a key part of professionalizing this effort — declared, “We are making history,” before he immediately started pitching the idea of flipping a European election (as far right trolls attempted with Emmanuel Macron’s race in 2017) and winning the 2020 election.

By that point, the trolls had been working on, and fine-tuning, this effort for at least a year.

Most chilling in the backstory presented in exhibits submitted at trial is the description of how Weev all but groomed Mackey, starting in 2015. “Thanks to weev I am inproving my rhetoric. People love it,” Mackey said in the Fed Free Hate Chat in November 2015. He boasted that his “exploding” twitter account was averaging 300,000 impressions every day, before he mused, “I just hope all this shitlording goes real life.” Two days later Weev remarked admiringly, “ricky’s audience expands rapidly, he’s now a leading polemicist” [Mackey did all this under the pseudonym Ricky Vaughn].

Weev and Mackey explained their ideological goals. “The goal is to give people simple lines they can share with family or around the water cooler,” Mackey described to Bidenshairplugs in September 2015. When Weev proposed in January 2016 that he and Mackey write a guide to trolling, he described the project as “ideological disruption” and “psychological loldongs terrorism.” The Daily Stormer webmaster boasted, “i am absolutely sure we can get anyone to do or believe anything as long as we come up with the right rhetorical formula and have people actually try to apply it consistently.” And so they explained the objectives to others. “[R]eally good memes go viral,” Mackey explained to AmericanMex067 on May 10, 2016. “really really good memes become embedded in our consciousness.”

One method they used was “hijacking hashtags”: either infecting the hashtags pushed by Hillary’s campaign with anti-Hillary content or filling anti-Trump hashtags with positive content.

Another was repetition: “repitition is key. ‘Crooked Hillary created ISIS with Obama’ repeat it again and again.” Trump hasn’t been repeating the same stupid attacks for 8 years because he’s uncreative or stupid. He’s doing it to intentionally troll America’s psyche.

A third was playing to people’s irrationality. As HalleyBorderCol put it when she pitched the text-to-vote meme, “people aren’t rational. a significant proportion of people who hear the rumour will NOT hear that the rumour has been debunked.”

One explicit goal was to use virality to get the mainstream press to pick up far right lines. Anthime “Baked Alaska” Gionet explained that they needed some tabloid to pick up their false claims about celebrities supporting Trump: “We gotta orchestrate it so good that some shitty tabloid even picks it up.” As they were trying to get the Podesta emails to trend in October 2016, P0TUSTrump argued, “we need CNN wnd [sic] liberal news forced to cover it.”

Microchip testified to the methodology at trial.

Q What does it mean to hijack a hashtag?

A So I guess I can give you an example, is the easiest way. It’s like if you have a hashtag — back then like a Hillary Clinton hashtag called “I’m with her,” then what that would be is I would say, okay, let’s take “I’m with her” hashtag, because that’s what Hillary Clinton voters are going to be looking at, because that’s their hashtag, and then I would tweet out thousands of — of tweets of — well, for example, old videos of Hillary Clinton or Bill Clinton talking about, you know, immigration policy for back in the ’90s where they said: You know, we should shut down borders, kick out people from the USA. Anything that was disparaging of Hillary Clinton would be injected into that — into those tweets with that hashtag, so that would overflow to her voters and they’d see it and be shocked by it.

Q Is it safe to say that most of your followers were Trump supporters?

A Oh, yeah.

Q And so by hijacking, in the example you just gave a Hillary Clinton hashtag, “I am with her,” you’re getting your message out of your silo and in front of other people who might not ordinarily see it if you just posted the tweet?

A Yeah, I wanted to infect everything.

Q Was there a certain time of day that you believed tweeting would have a maximum impact?

A Yeah, so I had figured out that early morning eastern time that — well, it first started out with New York Times. I would see that they would — they would publish stories in the morning, so the people could catch that when they woke up. And some of the stories were absolutely ridiculous — sorry. Some of the stories were absolutely ridiculous that they would post that, you know, had really no relevance to what was going on in the world, but they would still end up on trending hashtags, right? And so, I thought about that and thought, you know, is there a way that I could do the same thing.

And so what I would do is before the New York Times would publish their — their information, I would spend the very early morning or evening seeding information into random hashtags, or a hashtag we created, so that by the time the morning came around, we had already had thousands of tweets in that tag that people would see because there wasn’t much activity on Twitter, so you could easily create a hashtag that would end up on the trending list by the time morning came around.

In the 2016 election, this methodology served to take memes directly from the Daily Stormer, launder them through 4Chan, then use Twitter to inject them into mainstream discourse. That’s the methodology the far right still uses, including Trump when he baits people to make his Truth Social posts go viral on Twitter. The formula: use Twitter to break out of far right silos and into those of Hillary supporters to recodify meaning, and ensure it all goes viral so lazy reporters at traditional outlets republish it for free, using such tweets to supplant rational discussion of other news.

And as Microchip testified, in trolling, meaning and rational arguments don’t matter. Controversy does.

Q What was it about Podesta’s emails that you were sharing?

A That’s a good question.

So Podesta’s emails didn’t, in my opinion, have anything in particularly weird or strange about them, but my talent is to make things weird and strange so that there is a controversy. So I would take those emails and spin off other stories about the emails for the sole purpose of disparaging Hillary Clinton.

T[y]ing John Podesta to those emails, coming up with stories that had nothing to do with the emails but, you know, maybe had something to do with conspiracies of the day, and then his reputation would bleed over to Hillary Clinton, and then, because he was working for a campaign, Hillary Clinton would be disparaged.

Q So you’re essentially creating the appearance of some controversy or conspiracy associated with his emails and sharing that far and wide.

A That’s right.

Q Did you believe that what you were tweeting was true?

A No, and I didn’t care.

Q Did you fact-check any of it?

A No.

Q And so what was the ultimate purpose of that? What was your goal?

A To cause as much chaos as possible so that that would bleed over to Hillary Clinton and diminish her chance of winning.

The far right is still using this methodology to turn the corrupt but not exceptional behavior of Hunter Biden into a topic that convinces half the electorate that Joe Biden is as corrupt as Donald Trump. They’ve used this methodology to get the vast majority of media outlets to chase Hunter Biden’s dick pics like six-year-olds chasing soccer balls.

Back in 2016, the trolls had a good sense of how their efforts helped to support Trump’s electoral goals. In April 2016, for example, Baked Alaska pitched peeling off about a quarter of Bernie Sanders’ votes. “Imagine if we got even 25% of bernie supporters to ragevote for trump.” On November 2, 2016, the same day he posted the meme that got him prosecuted, Mackey explained that the key to winning PA was “to drive up turnout with non-college whites, and limit black turnout.” One user, 1080p, seemed to have special skills — if not sources — to adopt the look and feel of both campaigns.

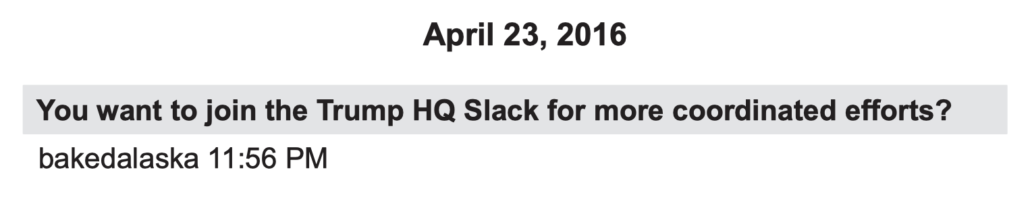

And this effort worked in close parallel to Trump’s campaign. As early as April 2016, Baked Alaska invited Mackey to join a campaign Slack “for more coordinated efforts.”

And there are several participants in the troll chatrooms whose actions or efforts to shield their true identities suggest they may be closely coordinating efforts as well.

Even in the unfettered world of 2016, Twitter’s anemic efforts to limit the trolls’ manipulation of the platform were a common point of discussion.

For example, as the trolls were trying to get Podesta’s emails trending, HalleyBorderCol complained, “we haven’t been able to get anything to trend for aaaages … unless they changed their algorithms, they must be watching what we’re doing.” Later in October, as they were launching two of their last meme campaigns, ImmigrationX complained, “I see Jack in full force today suppressing hashtags.”

Both Mackey and Microchip were banned multiple times. “Microchip get banned again??” was a common refrain. “glad to be back,” Microchip claimed on September 24. “they just banned me two times in 3 mins.” He warned others to follow back slowly to evade auto-detection of newly created accounts. “some folks are being banned right now, apparently, so if I’m banned for some reason, I’ll be right back,” Microchip warned on October 30. “Be good till nov 9th brother! We need your ass!” another troll said on the day Mackey was banned; at the time, Microchip was trending better than Trump himself. Mackey’s third ban in this period, in response to the tweets a jury has now deemed to be criminal, came with involvement from Jack Dorsey personally.

Both testified at trial about the techniques they used to thwart the bans (including using a gifted account to return quickly, in Mackey’s case). Microchip built banning, and bot-based restoration and magnification, into his automation process.

2020: Insurrection

The far right trolls succeeded in helping Donald Trump hijack American consciousness in 2016 to get elected.

By the time the trolls — some of whom moved into far more powerful positions with Trump’s election — tried again in 2020, the social media companies had put far more controls on the kinds of viral disinformation that trolls had used with such success in 2016.

As Yoel Roth explained during this year’s Twitter hearing, the social media companies expanded their moderation efforts with the support of a bipartisan consensus formed in response to Russia’s 2016 interference campaign (which was far less successful than the far right trolls’ efforts).

Rep. Shontel Brown:

So Mr. Roth, in a recent interview you stated, and I quote, beginning in 2017, every platform Twitter included, started to invest really heavily in building out an election integrity function. So I ask, were those investments driven in part by bipartisan concerns raised by Congress and the US government after the Russian influence operation in the 2016 presidential election?

Yoel Roth:

Thank you for the question. Yes. Those concerns were fundamentally bipartisan. The Senate’s investigation of Russian active measures was a bipartisan effort. The report was bipartisan, and I think we all share concerns with what Russia is doing to meddle in our elections.

But in advance of the election, Trump ratcheted up his attacks on moderation, personalizing them with a bullying attack on Roth himself.

In the spring of 2020, after years of internal debate, my team decided that Twitter should apply a label to a tweet of then-President Trump’s that asserted that voting by mail is fraud-prone, and that the coming election would be “rigged.” “Get the facts about mail-in ballots,” the label read.

On May 27, the morning after the label went up, the White House senior adviser Kellyanne Conway publicly identified me as the head of Twitter’s site integrity team. The next day, The New York Post put several of my tweets making fun of Mr. Trump and other Republicans on its cover. I had posted them years earlier, when I was a student and had a tiny social media following of mostly my friends and family. Now, they were front-page news. Later that day, Mr. Trump tweeted that I was a “hater.”

Legions of Twitter users, most of whom days prior had no idea who I was or what my job entailed, began a campaign of online harassment that lasted months, calling for me to be fired, jailed or killed. The volume of Twitter notifications crashed my phone. Friends I hadn’t heard from in years expressed their concern. On Instagram, old vacation photos and pictures of my dog were flooded with threatening comments and insults.

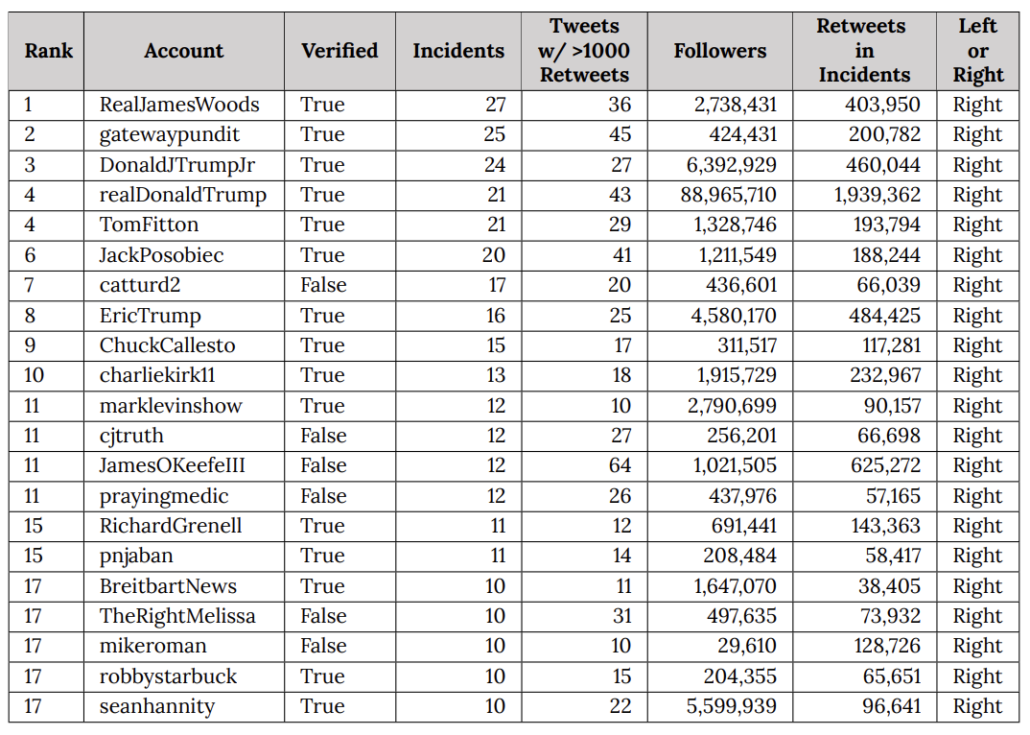

In reality, though, efforts to moderate disinformation did little to diminish the import of social media to right wing political efforts. During the election, the most effective trolls were mostly overt top associates of Donald Trump, or Trump himself, as this table I keep posting shows.

The table, which appears in a report on the election by Stanford’s Election Integrity Partnership, does not reflect use of disinformation (as the far right complains when they see it). Rather, it measures efficacy. Of a set of false narratives (some good-faith mistakes, some intentional propaganda) that circulated on Twitter in advance of the election, this table shows who disseminated the false narratives that achieved the most reach. The narratives with the broadest reach were spread by Donald Trump, his two adult sons, Tom Fitton, Jack Posobiec, Gateway Pundit, Charlie Kirk, and Catturd. The least recognized name on this list, Mike Roman, was among the 19 people indicted by Fani Willis for efforts to steal the election in Georgia. Trump’s Acting Director of National Intelligence, Ric Grenell, even got into the game (which is unsurprising, given that before he was made Ambassador to Germany, he was mostly just a far right troll).

This is a measure of how central social media was to Trump’s efforts to discredit, both before and after the election, the well-run election that he lost.

The far right also likes to claim (nonsensically, on its face, because these numbers reflect measurements taken after the election) that these narratives were censored. At most, and in significant part because Twitter refused to apply its own rules about disinformation to high-profile accounts including but not limited to Trump, this disinformation was labeled.

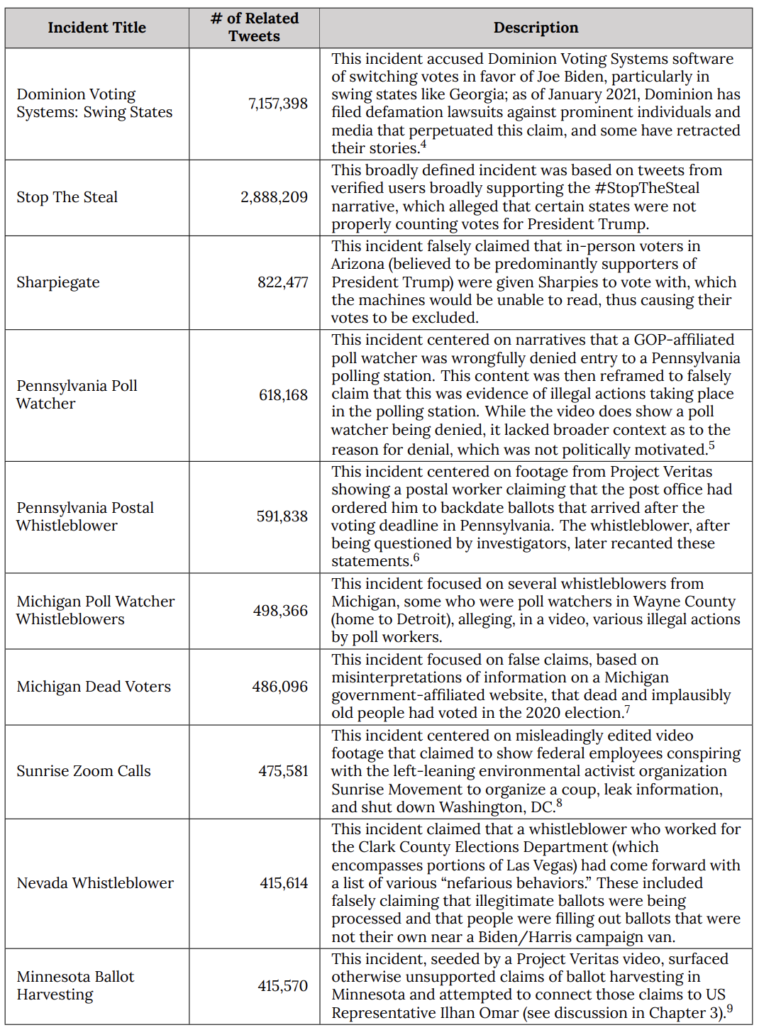

As the Draft January 6 Social Media Report described, Twitter had some success at labeling disinformation, albeit with millions of impressions before it could slap on a label.

Twitter’s response to violent rhetoric is the most relevant affect it had on January 6th, but the company’s larger civic integrity efforts relied heavily on labeling and downranking. In June of 2019, Twitter announced that it would label tweets from world leaders that violate its policies “but are in the public interest” with an “interstitial,” or a click-through warning users must bypass before viewing the content. In October of 2020, the company introduced an emergency form of this interstitial for high-profile tweets in violation of its civic integrity policy. According to information provided by Twitter, the company applied this interstitial to 456 tweets between October 27 and November 7, when the election was called for then-President-Elect Joe Biden. After the election was called, Twitter stopped applying this interstitial. From the information provided by Twitter, it appears these interstitials had a measurable effect on exposure to harmful content, but that effect ceased in the crucial weeks before January 6th.

The speed with which Twitter labels a tweet obviously impacts how many users see the unlabeled (mis)information and how many see the label. For PIIs [public interest interstitials] applied to high-profile violations of the civic integrity policy, about 45% of the 456 labeled tweets were treated within an hour of publication, and half the impressions on those tweets occurred after Twitter applied the interstitial. This number rose to more than eighty percent during election week, when staffing resources for civic issues were at their highest; after the election, staff were reassigned to broader enforcement work. In answers to Select Committee questions during a briefing on the company’s civic integrity policy, Twitter staff estimates that PIIs prevented more than 304 million impressions on violative content. But at an 80% success rate, this still leaves millions of impressions.

But this labeling effort stopped after the election.

According to unreliable testimony from Brandon Straka, the Stop the Steal effort started on Twitter. According to equally unreliable testimony from Ali Alexander, he primarily used Twitter to publicize and fundraise for the effort.

It was, per the Election Integrity Partnership, the second most successful disinformation narrative, after the Dominion propaganda.

And the January 6 Social Media Report describes how Stop the Steal (STS) grew organically on Facebook after being launched on Twitter, with Facebook playing a losing game of whack-a-mole against new STS groups.

But as Alexander described, after Trump started promoting the effort on December 19, his role became much easier.

Twitter wasn’t the only thing that brought a mob of people to DC and inspired many to attack the Capitol. There were right wing social media sites that may have been more important for organizing. But Twitter was an irreplaceable part of what happened.

The lesson of the 2020 election and January 6, if you care about democracy, is that Twitter and other social media companies never did enough moderation of violent speech and disinformation, and halted much of what they were doing after the election, laying the groundwork for January 6.

The lesson of the 2020 election for trolls is that inadequate efforts to moderate disinformation during the election — including the Hunter Biden “laptop” operation — prevented Trump from pulling off a repeat of 2016. The lesson of January 6, for far right trolls, is that unfettered exploitation of social media might allow them to pull off a violent coup.

That’s the critical background leading up to Elmo’s purchase of Twitter.

2024: Boosting Nazis

The first thing Elmo did after purchasing Twitter was to let the far right back on.

More recently, he has started paying them, with money that ad revenue doesn’t cover, to subsidize their propaganda.

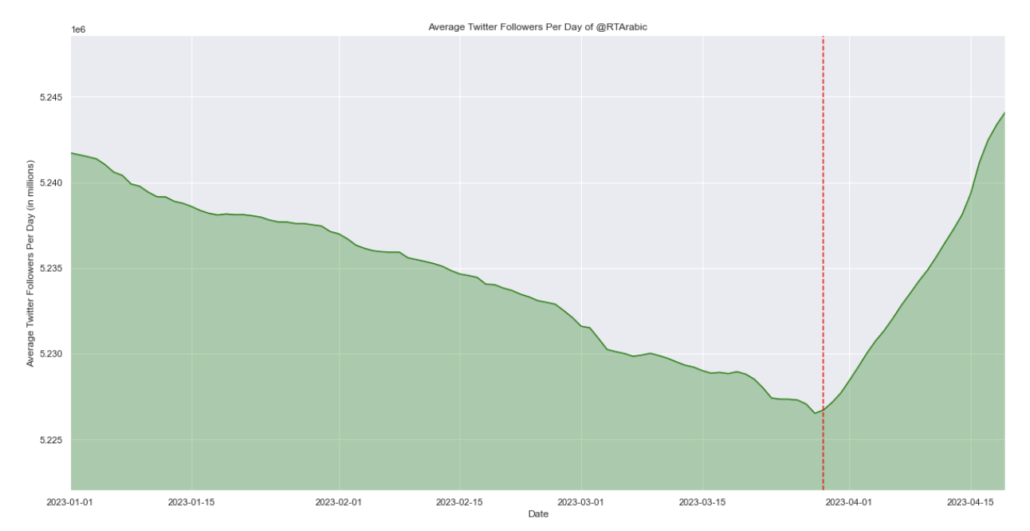

The second thing he did, with the Twitter Files, was to sow false claims about the effect and value of the moderation put into place in the wake of 2016 — an effort Republicans in Congress subsequently joined. The third thing Elmo did was to ratchet up the cost for the API, thereby making visibility into how Twitter works asymmetric, available to rich corporations and (reportedly) his Saudi investors, but newly unavailable to academic researchers working transparently. He has also reversed throttling for state-owned media, resulting in an immediate increase in propaganda.

He has done that while making it easier for authoritarian countries to take down content.

Elmo attempted, unsuccessfully, to monetize the site in ways that would insulate it from concerns about far right views or violence.

For months, Elmo, his favored trolls, and Republicans in Congress have demonized the work of NGOs that make the exploitation of Twitter by the far right visible. More recently, Elmo has started suing them, raising the cost of tracking fascism on Twitter yet more.

Roth recently wrote a NYT column that described the serial, dangerous bullying (first from Trump, then from Elmo) this pressure campaign has included, and laid out the stakes.

Bit by bit, hearing by hearing, these campaigns are systematically eroding hard-won improvements in the safety and integrity of online platforms — with the individuals doing this work bearing the most direct costs.

Tech platforms are retreating from their efforts to protect election security and slow the spread of online disinformation. Amid a broader climate of belt-tightening, companies have pulled back especially hard on their trust and safety efforts. As they face mounting pressure from a hostile Congress, these choices are as rational as they are dangerous.

In 2016, far right trolls helped to give Donald Trump the presidency. In 2020, their efforts to do so again were thwarted, barely, by attempts to limit the impact of disinformation and violence.

But in advance of 2024, Elmo has reversed all that. Xitter has preferentially valued far right speech, starting with Elmo’s increasingly radicalized rants. More importantly, Xitter has preferentially valued speech that totally undercuts rational thought.

Elmo has made Xitter a Machine for irrational far right hate speech.

The one thing that may save us is that this Machine for Fascism has destroyed Xitter’s core value to aspiring fascists: its role as a public square where normal people might find valuable news. In the process, Elmo has destroyed Twitter’s key role in bridging from the far right to mainstream readers.

But it’s not for lack of trying to make Xitter a Machine for Fascism.