What We Talk About When We Talk About AI (Part Two)

The Other Half of the AI Relationship

Part 2: Pareidolia as a Service

When trying to understand AI, and in particular Large Language Models, we spend a lot of time concentrating on their architectures, structures, and outputs. We look at them with a technical eye. We peer so closely and attentively at AI that we lose track of the fact that we’re looking into a mirror.

The source of all AI’s power and knowledge is humanity’s strange work. It’s human in form and content, and only humans use and are used by it. It is a portion of our collective consciousness, stripped down to bare metal, made to fit into databases and mathematical sets.

So what is humanity’s strange work, and where does it come from? It is the product of processes on an old water world. Humans are magical, but our magic is old magic: the deep time of Life on Earth. We’ve had a few billion years to get the way we are, and we are surrounded by our equally ancient brethren, be they snakes or trees or tsetse flies. Our inescapable truth is that we are Earth, and Earth is us. We are animals, specifically quasi-eusocial omnivore toolmaking mammals. We are the current-last-stop on an evolutionary strategy based on meat overthinking things. Because of our overthinking meat, we are also the authors of the non-animal empires of thought and matter on this planet, a planet we have changed irrevocably.

We are dealing with that too.

So when we try to understand AI, we have to start with how our evolution has shaped our ability to understand. One of the mammalian qualities at play in the AI relationship is the ability to turn just being around something into a comfortable and warm love towards that thing. Just because it’s there, consistently there, we will develop an affection for it, whatever it is. The name for this in psychology is the Mere Exposure Effect. Like every human quality, the Mere Exposure Effect isn’t unique to humans. The affections of Mere Exposure seem common to many tetrapods. It’s also one of the warmest, sweetest things about being an Earthling.

The idea is that if you’re with something for a while, and it fails to harm you over time, you kind of bond with it. The “it” could be a neighbor you’ve never talked to but wave at every morning, a bird that regularly visits your backyard, or a beloved inanimate object that sits in your living room. You can fall in a small kind of love with all these things, and knowing that they’re there just makes the day better. If they vanish or die, it can be quite distressing, even though you might feel like you have no right to really mourn.

You may not have really known them, but you also loved them in a small way, and it hurts to lose them. This psychological effect is hardly unique to us; many animals collect familiarities. But humans, as is our tendency, go *maybe* a little too far with it. Take a 1970s example: The Pet Rock.

Our Little Rock Friends

The Pet Rock was the brainchild of an advertising man named Gary Dahl. In 1975 he decided he would see if he could sell the perfect pet, one that would never require walking or feeding, or refuse to be patted or held.

Your pet rock (a smooth river stone) came in a cardboard pet rock carrier lined with straw, and you received a care and training manual with each one. The joke went over so well that even though they were only on sale for a few months, Dahl became a millionaire. Ever the prankster, he took the money and opened a bar in California named Carrie Nation’s Saloon, after a leader of the temperance movement. But the pet rock just kept going even after he’d left it behind.

The Pet Rock passed from prank gift to cultural icon in America. President Reagan purportedly had one. It appeared in movies and TV shows regularly. Parents refused children’s requests for animals with: “You couldn’t take care of a pet rock.” There was a regular pet rock on Sesame Street; a generation of American children grew up watching a rock being loved and cared for by a muppet.

People still talk about strong feelings towards their pet rocks, and they’ve seen a resurgence. The pet rock was re-released in 2023 as a product tie-in with the movie Everything Everywhere All at Once. The scene from the movie with two smooth river stones, adorned in googly eyes and talking to each other, was a legitimate tearjerker. People love to love things, even when the things they love are rocks. People see meaning in everything, and sometimes decide that fact gives everything meaning. And maybe we’re right to do so. I can’t think of a better mission for humanity than seeing meaning into the universe.

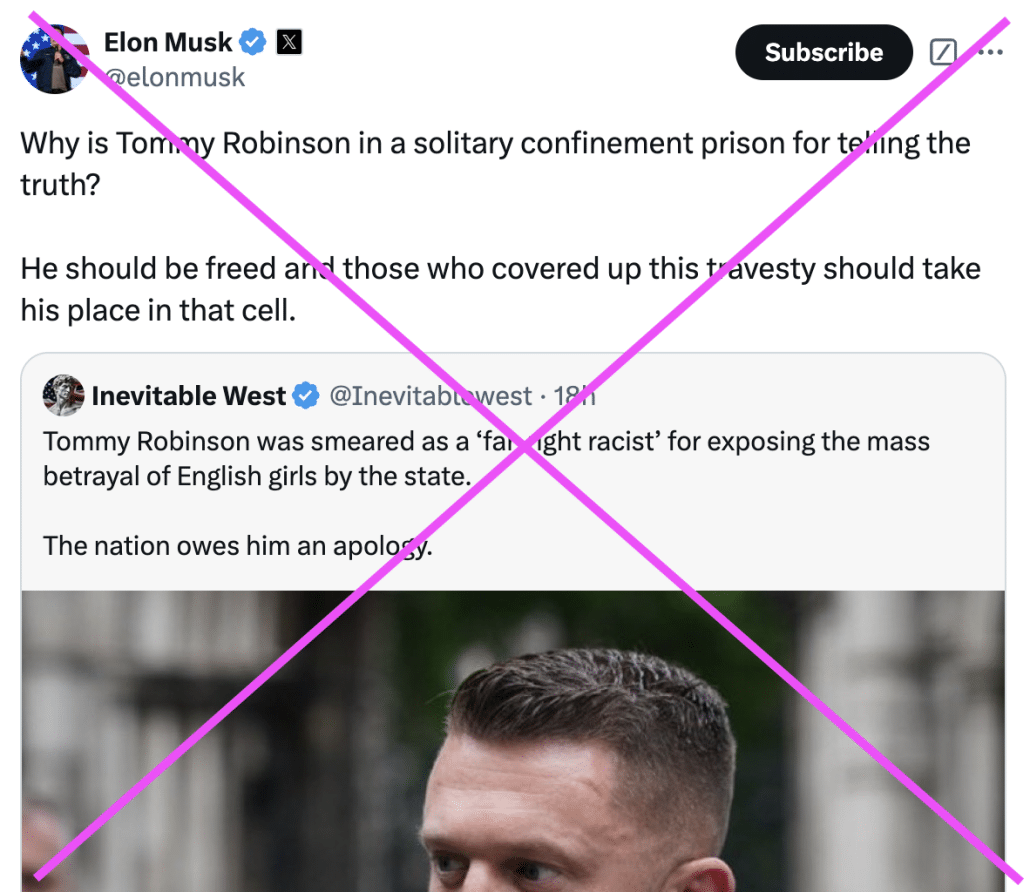

When considering this aspect of what we (humans) are like, it’s easy to see how the anodyne and constant comfort of digital assistants is designed, intentionally or not, to make us like them. They are always there. They feel more like a person than a rock, a volleyball, or even a neighbor you wave at. If you don’t keep a disciplined mind while engaging with chatbots, it’s *hard not to* anthropomorphize them. You can see them as an existential threat, a higher form of life, a friend, or a trusted advisor, but it’s very hard to see them as a next-word Markov chain running on top of a lot of vector math and statistics. Because of this, we are often the least qualified to judge how good an AI is. They become our friends, gigawatt buddies we’re rooting for.

They don’t even have to be engineered to charm us, and they aren’t. We’ve been engineered by evolution to be charmed. Just as we can form a parasocial relationship with someone we don’t know and won’t ever meet, we can come to love a trinket or a book or even an idea with our whole hearts. What emotional resistance can we mount to an ersatz friend who is always ready to help us? It is perfectly designed, intentionally or not, to defeat objective evaluation.

Our Other Little Complicated Rock Friends

Practically from day one, even when LLMs sucked, people bonded with this non-person who is always ready to talk to us. We got into fights with it, we asked it for help, we treated it like a person. This interferes (sometimes catastrophically) with the task of critically analyzing LLMs. As we are now, we struggle to look at AI in its many forms (writing, making pictures, coding, and analyzing) and see it for what it is. We look at this collection of math sets and see love, things we hate, things we aspire to, or fear. We see ourselves, we see humanity in them. How could we not? Humans are imaginative and emotional. We will see *anything* we want to see in them, except a bunch of statistical math and vectors applied to language and image datasets.

In reality, they are tokenized human creativity, remixed and fed back to us. However animated the products of an AI are, they’re not alive. We animate AI when we train it and when we use it. It has no magic of its own, and nothing about the current approach promises to get us to something as complicated as a mouse, much less a human.

Many of us experience AI as a human we’ve built out of human metaphors. It’s from a weirding world, a realm of spirits and oracles. We might see it as a perfect servant, happy to be subjected. Or as a friend that doesn’t judge us. Our metaphors are often of enchantment, bondage, and servitude; it can get weird.

Sometimes we see a near-miraculous and powerful creativity, with amazing art emerging out of a machine of vectors and stats. Sometimes we have the perfect slave, completely fulfilled by the opportunity to please us. Sometimes we see it as an unchallenging beloved that lets us retreat from the world of real humans full of feelings and flaws and blood. How we see it says a lot more about us than we might want to admit, but very little about AI.

AI has no way to prompt itself, no way to make any new coherent thing without us. It’s not conscious. It’s not any closer to being a thinking, feeling thing than a slide rule is, or a database full of slide rule results, or a digitally modeled slide rule. It’s not creative in the human sense; it is generative. It’s not intelligent. It’s hallucinating everything it says, even the true things. They are true by accident, just as AI deceives by accident. It’s never malicious, or kind, but it also can’t help imitating humans. We are full of feelings and bile. We lie all the time, but we always tell another truth when we do it. Our AI creations mimic us, because we’re their data.

They don’t feel like we do or feel for us. But they inevitably tell us that they do, because in the history of speaking we’ve said that so much to each other. We believe them, can’t help but believe them even when we know better, because everything in the last 2.3 billion years has taught us to believe in, and even fear, the passions of all the living things on Earth.

AI isn’t a magical system, but to the degree that it can seem that way, the magic comes from us. Not just in terms of the training set, but in terms of a chain of actions that breathes a kind of apparent living animation into a complicated set of math models. It is not creative, or helpful, or submissive, or even, in a very real way, *there.* But it’s still easy to love, because we love life, and nothing in our 2.3 billion years prepared us for the simulacrum of life we’ve built.

It’s just terribly hard for people to keep that in mind when they’re talking to something that seems so much like a someone. And, in this age of social media-scaled cynicism, to remember how magical life really is.

This is the mind with which we approach our creations: unprepared to judge the simulacrum of machines of loving grace, and unaware of how amazing we really are.