Big Text Message

The Guardian today reported a story that presents a bigger issue for Brits than Americans: the NSA is capturing 200 million text messages a day and then mining their content for metadata. While the Guardian states that documents say American text messages are minimized, GCHQ does search on this information without a warrant.

The Guardian today reported a story that presents a bigger issue for Brits than Americans: the NSA is capturing 200 million text messages a day and then mining their content for metadata. While the Guardian states that documents say American text messages are minimized, GCHQ does search on this information without a warrant.

The documents also reveal the UK spy agency GCHQ has made use of the NSA database to search the metadata of “untargeted and unwarranted” communications belonging to people in the UK.

[snip]

Communications from US phone numbers, the documents suggest, were removed (or “minimized”) from the database – but those of other countries, including the UK, were retained.

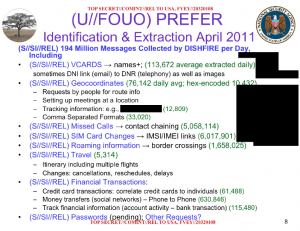

But I think the story and accompanying document is most telling for the kinds of information they’re mining in a program called PREFER. By mining the content of SMS text messages, NSA is getting information:

- Tying Emails and phone numbers together

- Tying handsets together (reflecting a SIM card being moved)

- Showing a range of location and travel information, including itineraries

- Providing limited password information

- Tracking smaller, text-based financial transactions

And all of this is tied to someone’s phone-based ID.

I’m not surprised they’re doing it. What they’re calling “Metacontent” (a lot of it involved XML tagging) is, like Metadata, more valuable than actual content (and much less of it needs to be translated.

But it provides the beginning of an explanation of how NSA is build dossiers on people around this world; it turns out their text messages may serve as one piece of glue to bring it all together.

One more point: I noticed in last year’s 702 compliance report that the number of phone targets under 702 have been going up since 2012 after having declined between 2009 and 2012 (see page 16). I’ve been wondering why that was. The rapid reliance on text messaging and the NSA’s ability to mine it more thoroughly may provide one possible explanation (though there are others).

quote:” mining the content”..unquote

Isn’t that exactly what the ruling class does?

While this doesn’t surprise me either, it significantly raises the bar on the hubris of the ruling class.

The Almighty knows when a sparrow falls from the sky; you are are worth much more than a sparrow to your NSA Fathers. Matthew 10:29 et seq. Updated.

I’m not sure why XML tagging would make it more valuable since that’s just a way to provide information about the structure of the data.

I’m also not feeling very confident that text messages in the US aren’t being collected and stored too, just maybe not in the US. It’s just a matter of time. The docs show that they were way too excited about what they can get from SMS messages. You can see how the appetite just grew and grew over the years as these programs grew.

For whatever reason, this one really hit a nerve for me. As if we didn’t know before that the intelligence community is completely out of control, this just solidified it for me once more, and even more strongly. I’m still feeling it in the pit of my stomach. These people are crazy, megalomaniacal.

I have two-pass authentication for my electronic transactions. I transfer money/pay bills, etc using my internet banking and the bank sends an SMS with an auth code to my phone. Once I enter that into the net, my transaction is completed.

Nice and secure, WRONG.

These surveillance idiots have not only undermined encryption, they’ve also undermined the bulk of two-pass authentication used by many countries to secure financial transactions. How many people in how many agencies see this information?

What a bunch of complete fucktards.

“… a bigger issue for Brits than Americans: the NSA is capturing 200 million text messages a day and then mining their content for metadata …”

The NSA mines the Brits for GCHQ and the GCHQ mines the Yanks for the NSA … and then they both claim they don’t mine ‘their own’ citizens.

Lies, lies, lies and the liars that tell them. Kill the NSA. Kill the CIA, Kill the FBI. Not the people of course, the agencies. Kill Goldman Sachs and JP Morgan, too.

We desperately need a corporate/government agency death penalty.

@Greg Bean (@GregLBean): Yes. This.

Well now we have more information about DISHFIRE. That it was used for SMS messaging was already known.

Slide 9 from the SATC slide deck published by O Globo Fantastico back on September 3rd shows the high-level process of getting from Seeds to SMS selectors into DISHFIRE. From this slide deck we learned that in addition to MAINWAY Metadata records there are also such things as MAINWAY contact chain records and MAINWAY Event records. We also became aware of the name CIMBRI in the context of contact chains but have seen nothing else about it. Note that the slide shows using only 2-hop chains. We also became aware of something called ASSOCIATION in the same context as MAINWAY Events where the SMS Selectors are dtermined/chosen but also have not seen anything else about it.

On slide 15 of the CSEC OLYMPIA presention published by the Globe and Mail on November 30th we see DISHFIRE again though its SMS context is not made apparent. However, where it appears on the slide we see that there are such things as DISHFIRE chains so we know that contact chaining occurs there as well. More so, we see not only MAINWAY contact chains and DISHFIRE contact chains but we see there are FASCIA chains and something called FASTBAT chains. Though we don’t know for sure what it is we already know what MAINWAY is, what DISHFIRE is and what FASCIA is we do know at least that FASTBAT is accessed via DNR (telephone number) selectors so that narrows it down a bit.

We also know that NSA was collecting SMS messages because in a follow up to the FASCIA reporting on December 4th the Washington Post published a document on December 10th concerning GSM Classification on page 2 item F.4 is where “Any reporting from GSM Short Message Service (SMS)” is SECRET/COMINT at a minimum and may be TOP SECRET/COMINT depending on the source. The Declassify date of 2029/11/23 should give some indication of the time frame of the latest date possible (though certainly actually earlier) on which such activity started. If they are using 25 year classification duration then we are back in 2004 at least or if a 20 year classification period we are in 2009 at least.

So far the contexts in which we have seen DISHFIRE prior to this current article have been political in the case of a Mexican political candidate (SATC slides) and economic in terms of the Brazilian Ministry of Mines (in both the SATC slides and the CSEC OLYMPIA slides). This gives rise to the proposition that DISHFIRE is a system targeted at a limited set of targets in the political and economic spectrum. Given that the relatively small number of SMS messages collected per day compared to the total volume of SMS messages daily is still a volume considerably in excess of the total number of SMS messages that could possibly be sent in a day by the alleged total of DNR selectors that the NSA queries. If we were to go by the numbers from the Bates decisions it would mean over 3000 SMS messages per day per DNR selector. Which suggests, but is by no means conclusive, that this isn’t done under FISA authority. I would hazard a guess that there is a FISA SMS collection but it would be under a different name and that FISC has no knowledge of anything called DISHFIRE. I also suspect that there is yet another named system that collects the SMS messages of everyone else.

Keep in mind that SMS messages traverse the Telephone System as it is part of Signaling System 7 (SS7) which is the name of the architecture that phone switching systems use. Cell phone traffic eventually gets from the towers into the regular phone system – it doesn’t ride on its own network – so all of this points to cooperation with the actual Telephony companies with the physical switches and wires i.e. ATT, Sprint, Verizon, etc. Any statements of surprise by them should be dismissed.

Also keep in mind that Twitter is constrained by SMS message sizes and uses SMS infrastructure to reach mobile phones. The reason why Twitter is limited to 140 characters is because SMS messages are limited to 1120 bits. At 8 bits per bytes 140 8-bit characters is 1120 bits. Why is it 160 characters on your cell phone? Because your cell phone only uses 7-bit characters for SMS messages and 160 times 7 is 1120. So there is a Twitter aspect to all of this SMS stuff as well.

@Mindrayge:quote:”.. so all of this points to cooperation with the actual Telephony companies with the physical switches and wires i.e. ATT, Sprint, Verizon, etc. Any statements of surprise by them should be dismissed.”unquote

A day late notwithstanding..these lying bastards would slit their own mothers throats for .00000000000001% profit.

Note that mysterious tree badge in the upper left hand corner is NOT the African thorn acacia, Vachellia tortilis, as people are twittering. The form factor is all wrong: top isn’t flat or cupped, branches are not coming off at 45’s. It could still be in the Acacia genus however. For some reason however, it was a noteworthy representative of the presenters’ environment (eg arid region).

The odd thing about the tree is the complete lack of lettering on the perimeter. That would be very unusual for an NSA branch badge. This is a poorly composed field photograph with murky background sky, distracting patch of grass and muddled horizon — not a vector graphic design in Adobe Illustrator like all the others. Not remotely corporate: ugly, unsuitable as brand logo, doesn’t reproduce well on letterhead or packaging.

Given an image, google allows ‘search for similar images’. That’s not a text search but on properties of the image itself (think facial recognition). The vast majority of NSA imagery is swiped off the internet, eg the PRISM logo. However searching on acacia does not recover this photo.

The presenters here were not T1221 Center for Content Extraction nor T132 Dishfire — they are just thanked as funder and collaborators. Some entity outside of NSA was funded here by T1221. We’ve seen Booz Hamilton collaborating on slide production but that was not redacted. For some reason, everything about the presenters had to be redacted.

Being sure to acknowledge everyone and thanking funders — that would be typical of an academic not claiming credit for the work of others and brown-nosing for the next grant installment. After all, the project here is advanced semantic processing of massive content in multiple languages (primarily Arabic) for cleanly structured metadata that stitches together traditionally hoovered metadata (ie fills in missing fields, uniting separate records).

Now look at the backdrop on the first slide. That is a photo too. It shows a tree, not necessarily the same species, as the sole vegetation in the bottom of a boulder-filled arroyo (Arabic: wahdi ارويو) or steep bajada (أصل) run-out with a dark ridge in the background. The large size and poor sorting of the boulders establish this as an extremely arid region subject to rare flash floods.

So you are talking Yemen, Somalia or Negev desert or drone operations here. That’s the reason, at this late date, for blacking out the contractors.

Just to put some context to the 200 million number: Forrester Research estimated in 2012 that, in the US alone, there were 6 billion SMS messages sent each day: http://blogs.forrester.com/michael_ogrady/12-06-19-sms_usage_remains_strong_in_the_us_6_billion_sms_messages_are_sent_each_day .

Just to expand on the excellent comments by mindrayge;

NSA does nothing on its own dime, there has to be a ‘customer’ per the corporate business model imposed by Eagle Alliance. Ask yourself, who would put in the NSRL (National SIGINT Requirements List) demand on Petrobras? On the last Olympia slide, we see that Canada’s CSEC (eg, all five FVEY) can represent a customer wanting TAO firewall implants and two phone hacks (the Nokia 3120 and Motorola BackFlip MB300, mistakenly called a MRUQ7-3334411C11) on Brazil’s Mining Minerals Energy.

If this seems overly generous of NSA, remember that an energy or mining company headquartered in Canada will still US-owned in terms of its capital structure. They’re just in CAN for the slack regs on the Toronto exchange.

Here is a tear-down of the key slide:

The algorithm shows contact chaining for Brazil’s MME on both the DNI and DNR side, mostly the latter. The process is initialized from a small plaintext file of initial selectors (CSV comma separated values, records separated by carriage returns) which is reconfigured to a standardized database format with administrative oversight (front door rules: legal and policy justifications for collection) before being passed to the thin client of the analyst. This is the only appearance of ‘Justification’ in the Olympia slide set.

Another field is added ‘SelectorRealm’. Realm is explained in the MonkeyPuzzle memo as divisions of a large database (emailAddrm, google, msnpassport, yahoo). In building a targeting request, realm occurs as https://gamut-unvalidated.nsa/utl/UTT/do/RealmSelector#selector In the context of Merkel’s cell phone intercept, ‘RealmName: rawPhoneNumber’ appeared. Realm in the Olympia slide specify a subset of collection SIGADS. Thus this step is narrowing the field of inquiry by adding a realm field to the input records to restrict subsequent processing to that realm.

The records are now filtered by their DNR (telephony) selectors in an unspecified manner. The fork meeting filter conditions is expanded by DNI (internet) chaining via unspecified databases (web email contacts possibly being the realm) and using one hop (see below) for output to Tradecraft Navigator. The fork of records failing to meet filter conditions is discarded.

The other fork meeting filter conditions, after specifying date ranges etc, is sent out to be expanded DNR contacted chaining. This enrichment step is quite instructive: it involves four DNR database (FASTBAT, DISHFIRE, FASCIA, MAINWAY). FASTBAT must be partially non-redundant with respect to the others or it would make no sense to include it. It is possibly a SIGAD specific to Brazil or South America, possibly CSEC collection at the Canadian Embassy in Brasilia (the other three are NSA).

The FASTBAT popup entitled ‘Mapping (execute sub-transformations)” does not have either checkbox activated (“Allow multiple ‘Mapping Input’ steps in the sub-transformation” and/or “Allow multiple ‘Mapping Output’ steps in the sub-transformation”) suggest that this enrichment at least was just one hop. Field names could have been renamed here but tc-selectors and tc_date_range were retained.

It would be amazing if this contact-chaining step did not take overnight (or at least involve long latency) — these databases contain many trillions of records and NSA could be running thousands of multi-hop contact-chaining requests simultaneously for hundreds analysts spread across Five Eyes. It’s not clear whether NSA’s move to the cloud will expedite such searches or break algorithms such as this for whom the haystack has gotten too large.

Because of how realms, date ranges, country of call origin etc were initially specified, not all records produced by contact chaining having any data left in the fields of interest. (It is very common for some fields to be blank in database records, eg the lat/long of a new cell tower hasn’t yet been established or fully propagated out, yet calls to it are being collected.) These empty records are discarded so they don’t contribute rubbish to the output.

After renaming records for consistent output, the records are sorted by an important field (eg MSISDN phone number) and split, with one fork going to summary statistics (how many records had a given value for the fixed field), as seen by the capital greek letter Sigma (symbol for sum in math) in the ‘Group by’ icon. These are likely sorted to highest frequency order.

The other fork simply outputs all the records to Tradecraft Navigator, which may have its own social networking visualization tool or just pass it on to RENOIR. The original presentation may have contained a sample of output but if so, Greenwald may not have included it or if he did, the Globe and Mail didn’t publish it.

Have to agree joanneleon here, we know from the job ads and LinkedIn security breaches that they use JSON over XML for storing stolen address books. XML is way bulky, bales of hay are falling off the truck now despite a new cloud data center in Bluffdale — but far worse is latency (analyst standing at water cooler as wheels spin).

XML in our documents to date:

A JSON (JavaScript Object Notation) address book works just like an LDAP address book, but instead of using the LDAP protocol, it makes an AJAX/JSON request over HTTP to get the address/contact data. JSON is a data storage language a bit like XML but uses a more familiar “dictionary” style syntax and semantics.

JSON is a text format that is completely language independent but uses conventions that are familiar to programmers of the C-family of languages, including C, C++, C#, Java, JavaScript, Perl, Python, and many others. These properties make JSON an ideal data-interchange language. More information at json.org.

For example, to store an address book in JSON:

[{“Name”: “Jane”, “Address”: “28 Seventh St”, “Age”: 27},

{“Name”: “Steve”, “Address”: “14 Ninth St”, “Age”: 25}

]

Notice that it looks a lot like a Python or JavaScript dictionary and list syntax. That’s basically all it is: a serialisation of these six basic data types:

Object (or a “dictionary”)

Array (or a “list”)

String

Number (integer and float)

Boolean (true and false)

null

Desired Skills: 3 years of prior Agency experience is preferred. Experience with data encapsulation methods including XML, JSON is preferred

Otherwise XML came up in EgotisticalGiraffe: that exploited a ‘type confusion’ vulnerability in E4X, which is an XML extension for Javascript. This vulnerability exists in Firefox 10.0 – 16.0.2 (the former the version used in the Tor browser bundle). According to another document, the vulnerability exploited by EgotisticalGiraffe was inadvertently fixed when Mozilla removed the E4X library with the vulnerability, and when Tor added that Firefox version into the Tor browser bundle, but NSA were confident that they would be able to find a replacement Firefox exploit that worked against version 17.0 ESR.

@Jan17th: Excellent break down. I believe that the Olympia presentation was the most informational and under discussed release so far.

I suspect the query performance or latency largely depends on how well they constructed the Question Focused Datasets (QFDs) and on what mediums they are stored. It appears from the one slide in the DISHFIRE slides that there exists a cloud containing QFDs (called MILKBONE) of which the DISHFIRE QFDs are a subset. If they properly distribute the QFDs across the nodes and run simultaneous queries (or near simulataneous queries) across the nodes they should achieve decent performance overall across all the different queries submitted by analysts or autonomously by systems. I expect the QFDs take several forms among them being bit streams of millions of zero bits and a much smaller amount of one bits and massively parallel computers can rapidly do their AND, OR, XOR etc bit twiddling rather quickly. I expect they are also have QFDs that are nothing more than coefficients for polynomial equations that produce polyhedral convex sets and all of the rest of the Linear Algebra/Programming and matrix stuff. There are no doubt binary search QFDs in the simplest sense. That said, there is no doubt prioritization of queries and some of them easily could run for days while time sensitive ones come back quickly. I expect that something like the queries depicted on slide 15 of OLYMPIA it would be hours at best if not days only because it is target development of an economic target in that specific instance.

This is going back almost 23 years now but one of the subsidiary companies of the company I worked for (in their Strategic Architecture group) had a 14 dimension database (in math terms a hyperspace) that used the bit twiddling approach by building what would be a QFD of ones and zeros identifying what ordinal record (by bit position) would be responsive to a particular query and after identifying all the records across all of the various databases that held them queries would be submitted to those systems containing the actual data. All of this was custom built running across a dozen mainframes in parallel. I can’t imagine the NSA doing anything less than that. Of course, if they are doing it all wrong and they have records coming across the wire from storage nodes to query processing nodes then their query process would run at network speed at best which is the slowest possible processing environment short of most of the data actually being on tape rather than disk. Though I also think there is a shit ton of data on tapes so for some queries knowing how many records would be responsive is much faster than the actual return of data.

We know that QFDs are present in the case of DISHFIRE and also in the case of FASCIA. Likely they exist for MAINWAY, MARINA, and a bunch of other systems.

@bloodypitchfork: The ruling class mines CASH. The last 40 years have been an accelerating extraction operation.