Elon Musk’s Machine for Fascism: A One-Stop Shop for Disinformation and Violence

Just over a year ago, I described how Twitter had been used as a way to sow false claims in support of Trump in 2016 and 2020.

I described how, in 2016, trolls professionalized their efforts, with the early contribution of Daily Stormer webmaster Andrew “Weev” Auernheimer. I quoted testimony from Microchip, a key cooperating co-conspirator at Douglass Mackey’s trial, describing how he took unoffensive content stolen from John Podesta and turned it into a controversy that would undermine Hillary Clinton’s chances.

Q What was it about Podesta’s emails that you were sharing?

A That’s a good question.

So Podesta’s emails didn’t, in my opinion, have anything in particularly weird or strange about them, but my talent is to make things weird and strange so that there is a controversy. So I would take those emails and spin off other stories about the emails for the sole purpose of disparaging Hillary Clinton.

T[y]ing John Podesta to those emails, coming up with stories that had nothing to do with the emails but, you know, maybe had something to do with conspiracies of the day, and then his reputation would bleed over to Hillary Clinton, and then, because he was working for a campaign, Hillary Clinton would be disparaged.

Q So you’re essentially creating the appearance of some controversy or conspiracy associated with his emails and sharing that far and wide.

A That’s right.

Q Did you believe that what you were tweeting was true?

A No, and I didn’t care.

Q Did you fact-check any of it?

A No.

Q And so what was the ultimate purpose of that? What was your goal?

A To cause as much chaos as possible so that that would bleed over to Hillary Clinton and diminish her chance of winning.

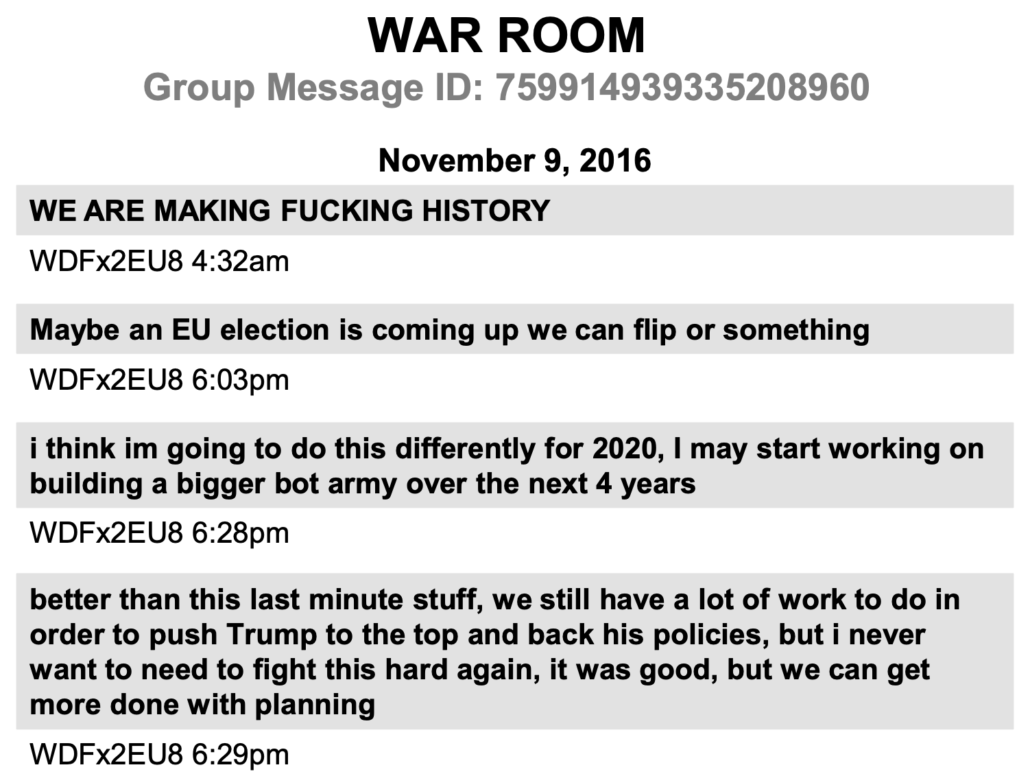

After Trump won, the trolls turned immediately to replicating their efforts.

Microchip — a key part of professionalizing this effort — declared, “We are making history,” before he immediately started pitching the idea of flipping a European election (as far right trolls attempted with Emmanuel Macron’s race in 2017) and winning the 2020 election.

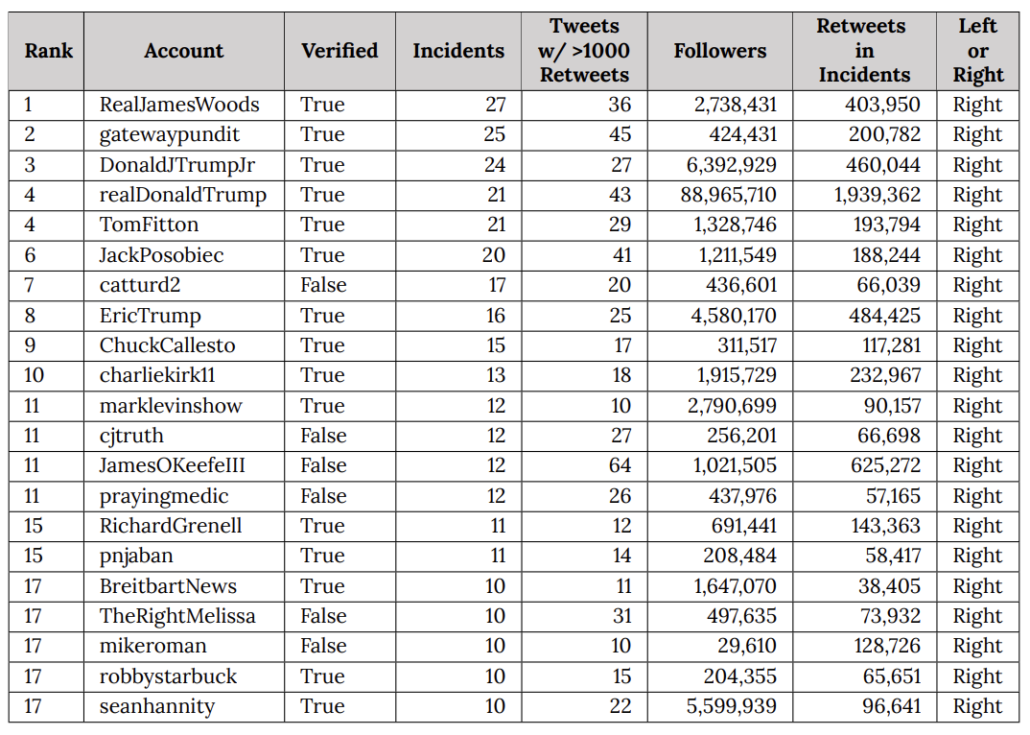

They did replicate the effort. That same post described how, in 2020, Trump’s role in the bullshit disinformation was overt.

Trump, his sons, and his top influencers were all among a list of the twenty most efficient disseminators of false claims about the election compiled by the Election Integrity Partnership after the fact.

While some of the false claims Trump and his supporters made were throttled in real time, almost none of them were taken down.

But the effort to throttle generally ended after the election, and Stop the Steal groups on Facebook proliferated in advance of January 6.

To this day, I’m not sure what would have happened had not the social media companies shut down Donald Trump.

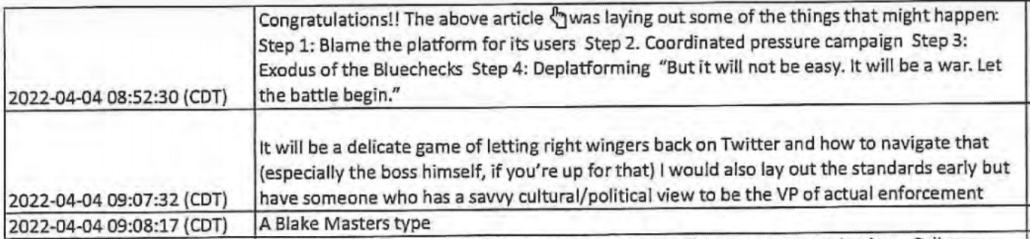

And then, shortly thereafter, the idea was born for the richest man in the world to buy Twitter. Even his early discussions focused on eliminating the kind of moderation that served as a brake in 2020. During that process, someone suspected of being Stephen Miller started pitching Elon Musk on how to bring back the far right, including “the boss,” understood to be Trump.

Musk started dumping money into Miller’s xeno- and transphobic political efforts.

Once Musk did take over Xitter, NGOs run by far right operatives, Republicans in Congress, and useful idiots coordinated to undercut any kind of systematic moderation.

As I laid out last year, the end result seemed to leave us with the professionalization and reach of 2020 but without the moderation. Allies of Donald Trump made a concerted effort to ensure there was little to hold back a flood of false claims undermining democracy.

Meanwhile, the far right, including Elon, started using the Nazi bar that Elon cultivated to stoke right wing violence here on my side of the pond, first with targeted Irish anti-migrant actions, then with the riots that started in Southport. I’ve been tracing those efforts for some time, but Rolling Stone published a new report on it yesterday.

Throughout, the main forum where right-wing pundits and influencers stoked public anger was X. But a key driver of the unrest was the platform’s owner himself, Elon Musk. He would link the riots to mass immigration, at one point posting that “civil war” in the U.K. was inevitable. He trolled the newly elected British prime minister, Keir Starmer — whose Labour Party won power in July after 14 years of Conservative rule — for supposedly being biased against right-wing “protesters.” After Nigel Farage, the leader of radical-right party Reform U.K. and Trump ally, posted on X that, “Keir Starmer poses the biggest threat to free speech we’ve seen in our history,” Musk replied: “True.”

Anything Musk even slightly interacted with during the days of violence received a huge boost, due to the way he has reportedly tinkered with X’s algorithm and thanks to his 200 million followers, the largest following on X. “He’s the curator-in-chief — he’s the man with the Midas touch,” says Marc Owen Jones, an expert on far-right disinformation and associate professor at Northwestern University in Qatar. “He boosted accounts that were contributing to the narratives of disinformation and anti-Muslim hate speech that were fueling these riots.”

Elon Musk, the richest man in the world, one of Trump’s most gleeful supporters, someone with troubling links to both China and Russia, has set up a one-stop shop: Joining false claims about the election with networks of fascists who’ll take to the streets.

With that in mind, I want to point to a number of reports on how disinformation has run rampant on Xitter.

The Center for Countering Digital Hate (one of the groups that Elon unsuccessfully sued) released a report showing that even where volunteers mark disinformation on Xitter, those Community Notes often never get shown to users.

Despite a dedicated group of X users producing accurate, well-sourced notes, a significant portion never reaches public view. In this report we found that 74% of accurate Community Notes on false or misleading claims about US elections never get shown to users. This allows misleading posts about voter fraud, election integrity, and political candidates to spread and be viewed millions of times. Posts without Community Notes promoting false narratives about US politics have garnered billions of views, outpacing the reach of their fact-checked counterparts by 13 times.

NBC described Elon’s personal role in magnifying false claims.

In three instances in the last month, Musk’s posts highlighting election misinformation have been viewed over 200 times more than fact-checking posts correcting those claims that have been published on X by government officials or accounts.

Musk frequently boosts false claims about voting in the U.S., and rarely, if ever, offers corrections when caught sharing them. False claims he has posted this month routinely receive tens of millions of views, by X’s metrics, while rebuttals from election officials usually receive only tens or hundreds of thousands.

Musk, who declared his full-throated support for Donald Trump’s presidential campaign in July, is facing at least 11 lawsuits and regulatory battles under the Biden administration related to his various companies.

And CNN described how efforts from election administrators to counter this flood of disinformation have been overwhelmed.

Elon Musk’s misinformation megaphone has created a “huge problem” for election officials in key battleground states who told CNN they’re struggling to combat the wave of falsehoods coming from the tech billionaire and spreading wildly on his X platform.

Election officials in pivotal battleground states including Pennsylvania, Michigan and Arizona have all tried – and largely failed – to fact-check Musk in real time. At least one has tried passing along personal notes asking he stop spreading baseless claims likely to mislead voters.

“I’ve had my friends hand-deliver stuff to him,” said Stephen Richer, a top election official in Arizona’s Maricopa County, a Republican who has faced violent threats for saying the 2020 election was secure.

“We’ve pulled out more stops than most people have available to try to put accurate information in front of (Musk),” Richer added. “It has been unsuccessful.”

Ever since former President Donald Trump and his allies trumpeted bogus claims of election fraud to try to overturn his loss to Joe Biden in 2020, debunking election misinformation has become akin to a second full-time job for election officials, alongside administering actual elections. But Musk – with his ownership of the X platform, prominent backing of Trump and penchant for spreading false claims – has presented a unique challenge.

“The bottom line is it’s really disappointing that someone with as many resources and as big of a platform as he clearly has would use those resources and allow that platform to be misused to spread misinformation,” Michigan Secretary of State Jocelyn Benson told CNN, “when he could help us restore and ensure people can have rightly placed faith in our election outcomes, whatever they may be.”

Finally, Wired explained how, last week, Elon’s PAC made it worse, by setting up a group of 50,000 people stoking conspiracy theories.

For months, billionaire and X owner Elon Musk has used his platform to share election conspiracy theories that could undermine faith in the outcome of the 2024 election. Last week, the political action committee (PAC) Musk backs took it a step further, launching a group on X called the Election Integrity Community. The group has nearly 50,000 members and says that it is meant to be a place where users can “share potential incidents of voter fraud or irregularities you see while voting in the 2024 election.”

In practice, it is a cesspool of election conspiracy theories, alleging everything from unauthorized immigrants voting to misspelled candidate names on ballots. “It’s just an election denier jamboree,” says Paul Barrett, deputy director of the Center for Business and Human Rights at New York University, who authored a recent report on how social media facilitates political violence.

[snip]

Inside the group, multiple accounts shared a viral video of a person ripping up ballots, allegedly from Bucks County, Pennsylvania, which US intelligence agencies have said is fake. Another account shared a video from conspiracy theorist Alex Jones alleging that unauthorized immigrants were being bussed to polling locations to vote. One video shared multiple times, and also purportedly from Buck County, shows a voter confronting a woman with a “voter protection” tag on a lanyard who tells the woman filming that she is there for “early vote monitoring” and asks not to be recorded. Text in the accompanying post says that there were “long lines and early cut offs” and alleges election interference. That post has been viewed more than 1 million times.

Some accounts merely retweet local news stories, or right-wing influencers like Lara Loomer and Jack Posobiec, rather than sharing their own personal experiences.

One account merely reshared a post from Sidney Powell, the disgraced lawyer who attempted to help Trump overturn the 2020 election, in which she says that voting machines in Wisconsin connect to the internet, and therefore could be tampered with. In actuality, voting machines are difficult to hack. Many of the accounts reference issues in swing states like Pennsylvania, Michigan, and Wisconsin.

This latter network includes all the same elements we saw behind the riots in the UK — Alex Jones, Trump’s fascist trolls, Russian spies (except Tommy Robinson, who just got jailed on contempt charges).

Now, in my piece last year, I suggested that Elon has diminished the effectiveness of this machine for fascism by driving so many people off of it.

The one thing that may save us is that this Machine for Fascism has destroyed Xitter’s core value to aspiring fascists: it has destroyed Xitter’s role as a public square, from which normal people might find valuable news. In the process, Elmo has destroyed Twitter’s key role in bridging from the far right to mainstream readers.

Maybe that’s true? Or maybe by driving off so many journalists Elon has only ensured that journalists have to go look to find this stuff — and to be utterly clear, this kind of journalism is some of the most important work being done right now.

But with successful test runs stoking far right violence in Ireland and the UK, that may not matter. Effectively, Elon has made Xitter a massive version of Gab, a one-stop shop from which he can both sow disinformation and stoke violence.

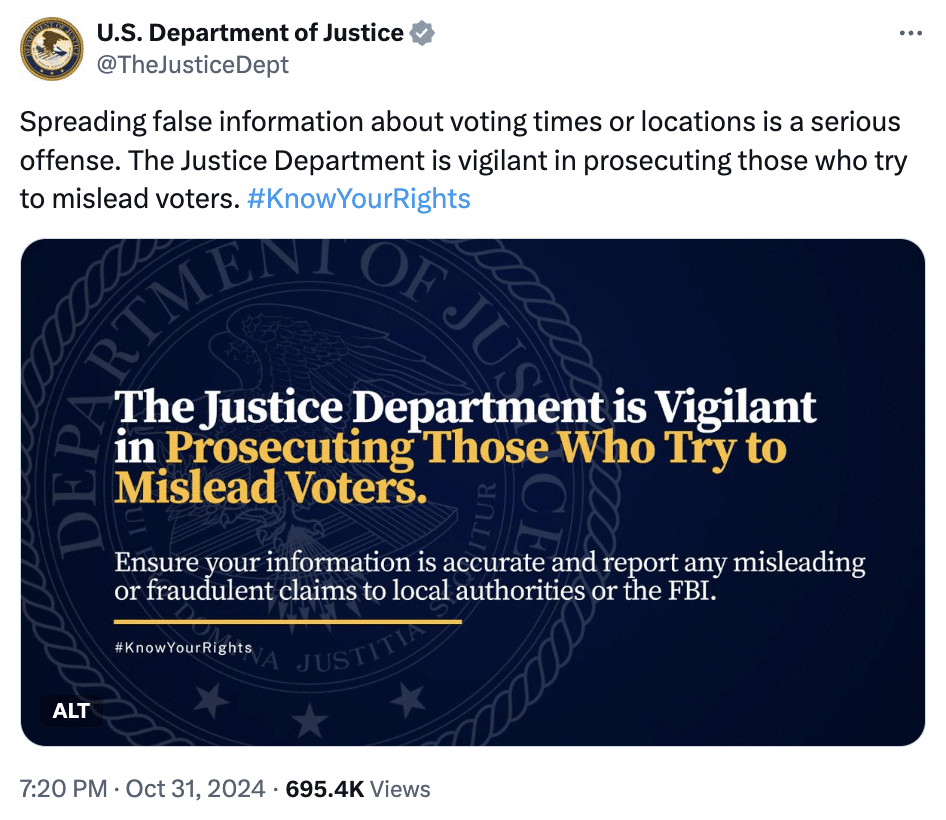

On a near daily basis, DOJ issues warnings that some of this — not the false claims about fraud and not much of the violent rhetoric, but definitely those who try to confuse voters about how or when to vote — is illegal.

NBC reports that election officials are keeping records of the corrections they’ve issued, which would be useful if legal cases follow. What we don’t know is whether DOJ is issuing notices of illegal speech to Xitter (they certainly did in 2020; it’s one of the things Matt Taibbi wildly misrepresented), and if so, what they’re doing about it.

I am, as I have been for some time, gravely alarmed by all this. The US has far fewer protections against this kind of incitement than the UK or the EU. Much of this is not illegal.

Kamala Harris does have — and is using — one important tool against this. Her campaign has made a record number of contacts directly with voters. She is, effectively, sidestepping this wash of disinformation by using her massive network of volunteers to speak directly to people.

If that works, if Harris can continue to do what she seems to be doing in key swing states (though maybe not Nevada): getting more of her voters to the polls, then all this will come to a head in the aftermath, as I suspect other things may come to a head in the transition period, assuming Harris can win this thing. In a period when DOJ can and might act, the big question is whether American democracy can take action to shut down a machine that has been fine-tuned for years for this moment.

American law and years of effort to privilege Nazi speech have created the opportunity to build a machine for fascism. And I really don’t know how it’ll work out.

Update: Thus far there have been three known Russian disinformation attempts: a false claim of sexual abuse targeted at Tim Walz, a fake video showing votes in Bucks County being destroyed, and now a false claim that a Haitian migrant was voting illegally in Georgia.

This statement from Raffensperger, publicly asking Musk to take it down, may provide some kind of legal basis to take further steps. That’s the kind of thing that is needed to get this under control.

Update: I meant to include the Atlantic’s contribution to the reporting on Musk’s “Election Integrity Community;” it’s a good thing so many people are focused on Elon’s efforts.

Nothing better encapsulates X’s ability to sow informational chaos than the Election Integrity Community—a feed on the platform where users are instructed to subscribe and “share potential incidents of voter fraud or irregularities you see while voting in the 2024 election.” The community, which was launched last week by Musk’s America PAC, has more than 34,000 members; roughly 20,000 have joined since Musk promoted the feed last night. It is jammed with examples of terrified speculation and clearly false rumors about fraud. Its top post yesterday morning was a long rant from a “Q Patriot.” His complaint was that when he went to vote early in Philadelphia, election workers directed him to fill out a mail-in ballot and place it in a secure drop box, a process he described as “VERY SKETCHY!” But this is, in fact, just how things work: Pennsylvania’s early-voting system functions via on-demand mail-in ballots, which are filled in at polling locations. The Q Patriot’s post, which has been viewed more than 62,000 times, is representative of the type of fearmongering present in the feed and a sterling example of a phenomenon recently articulated by the technology writer Mike Masnick, where “everything is a conspiracy theory when you don’t bother to educate yourself.”

This in a way overlaps Ed’s post of “Do you trust the media?” X(Twitter) is media, and was biased under Dorsey to where a (past?) contributor to EW got removed for not talking pretty. Lack of political correctness. Dorsey now is farting around with crypto.

He was not the gold standard. Musk is different, but the pendulum has swung, at its fastest point passing equilibrium.

“I am, as I have been for some time, gravely alarmed by all this. The US has far fewer protections against this kind of incitement than the UK or the EU. Much of this is not illegal.” Ding ding ding! Thank you for this post.

TFG is Musk’s useful idiot, not the other way around, imo.

I don’t think either of them are useful. Definitely idiots, however!

They are both useful idiots from Putin’s POV IMHO.

yes, this is the more salient point

That I definitely agree with!

The problem is the cadence mismatch: possibly illegal overt actions appearing 4-6 months prior to election day; prosecutorial responses appearing 1-2 years after election day. MAGA strategy (possibly tutored by KGB-trained Putin, and translated into action by Cohn-tutored Trump) has effectively exploited this mismatch.

How to deal with this issue in a democracy with free speech protections?

The President could declare Xitter to be a threat to the nation. Is it too late now? Maybe. All we can do is hope that Harris can prevail, and I think this hope is reasonable.

Why not shut it down? There are plenty of outlets for free speech. There is no constitutional right to broadcast on Xitter or Facebook. Yes, there will be lawsuits and pushback. Maybe it’s a dice roll with the Supreme Court.

But the main point is that it changes the cadence mismatch. Now MAGA has to prove they have a right to Xitter. Xitter has to scramble to stay in business. Negotiations will follow. Maybe the FTC will step in. Maybe something like UK/Germany protocols will result.

This could be done at any time.

There are about 100 things wrong, legally, with your argument, starting with “This could be done at any time.” If you want to fight fascism, you need to talk about what is real, not what you think should be real.

I also think there are steps in place to address what you call a cadence mismatch.

Thanks for responding – I’m glad to be told I’m wrong, it helps me to stop spreading misinformation and to align my point of view on what is real.

On the cadence mismatch, what sorts of steps are you thinking about?

For one, someone leaked Cleta Mitchell’s plans to NYT, so the kinds of things that took years for J6C and DOJ to collect are identified in public form this year.

For another, given the charges already against Trump, AFTER the election, DOJ would easily be able to argue that Trump was engaged in similar activity and no longer had a campaign claim to this stuff.

There are other steps Smith might take but on those we can wait and see.

“(Y)ou need to talk about what is real, not what you think should be real.”

Wait; so does this mean you don’t believe a demon clawed Tucker Carlson in his sleep? Surely you don’t think one of his 4 dogs in the bed did that!

“Maybe something like UK/Germany protocols will result.

This could be done at any time.”

I highly doubt it. The current positions of German domestic law, U.K. domestic law and the ECHR jurisprudence on free speech (which grants a wide margin of appreciation in the application of Article 10 to domestic legislators and courts) are situations which have evolved over time, and reflect a socio-politico-legal culture unique to each despite commonalities.

Eg commentary on court proceedings in both U.K. and Germany is highly restricted in general, and in the U.K. where there are interrelated criminal conspiracy cases with sequential trials for groups of defendants, the courts can ban publication of details of evidence in the earlier trials until the conclusion of the final trial.

One of Tommy Robinson’s convictions was for criminal contempt for the breach of such an order. He was using the trials of grooming gangs as part of his anti-Muslim agitation.

Non-adult defendants are not named publicly until they reach adulthood or the trial concludes. At the conclusion of a trial of a juvenile, a judge has discretion to allow them to be named if satisfied there is an overwhelming public interest in doing so. These are non-negotiable standards of due process for children.

In the Southport case, the false identification of the mentally disturbed juvenile perpetrator broke the law in two respects: (1) contempt of court for purporting to name a juvenile defendant, and (2) inflaming religious and/or racial hatred by purporting that the perpetrator had an ostensibly Muslim name.

I mention these particulars because I think they illustrate how different U.K. law is. While I believe the US may benefit from reevaluating how to interpret and justify limits on free speech, learning from the U.K., Germany, or other ECHR signatories involves being prepared for a culture shock.

LOL. You write as if you were a troll or a closet Trumpist. None of what you propose is possible under US law, at least until Donald Trump guts it.

Well, perhaps a closet try-to-anticipate-what-MAGA-might-do-and-mouth-absurd-ideas-for-how-to-counter sort of person. Not a troll.

I’m beginning to think someone could start a boutique law firm specializing in various kinds of aggressive anti-defamation work, with a side-order of injury claims against those who are currently pushing the idea of civil war with every tweet/post they make, and enjoy some very nice profits.

This assumes our country survives the next elections, of course. I hope someone is quietly bringing the National Guard to full readiness and making sure that local law enforcement has been brought into line with the current, federal anti-coup policies (whatever those might be, if they exist… *Sighs*)

It would be a firm like Marc Elias becoming the go-to lawyer for election fraud claims.

As for the National Guard, the local commanders can do all the prep work they want to get to full readiness, and local law enforcement can do the same, but until their governor (or in DC’s case, the president/Sec Def) activates the NG, all they can do is stand by and wait. See Jan 6 . . .

On standby I guess is one step more than standing by and as much a warning to MAGA fanatics as an expectation of severe unrest.

“National Guard on standby in several states as a precaution for potential election unrest”

https://edition.cnn.com/politics/live-news/trump-harris-election-11-02-24#cm30gm077008u3b6pozi75re9

This is sure to rock someone’s fantasy world

“…side-order of injury claims…” thousands of potential paper cuts

The firm that won Rudy Giuliani’s apartment for Ruby Freeman is doing much of that. They first started trying with Seth Rich’s family, but unsuccessfully.

If I had a hundred million dollars I’d finance a liberal law firm clobbering conservatives who lie and slander. It would make big bucks and change politics nicely!

I had an interesting discussion with ChatGPT about this, and have posted a link below.

I asked if X actually influences elections and if so, what can be done. ChatGPT came up with some interesting answers.

[link removed]

[Moderator’s note: Do not use ChatGPT or other generative AI in comments at this site — no link to output, no cut-and-pasting into a comment, no scraping this site using generative AI. /~Rayne]

Scary, very scary. How to combat the richest man in the world holding his thumb on the scale for Trump?

I did ask ChatGPT-4o for some answers, but my link was moderated away, so I suggest that others can ask the same questions.

(I use ChatGPT-4o in my work, which occasionally requires solving technical and informational problems that I have never solved before, so I have developed a good relationship with it.)

Here are the questions that I asked:

“How much actual influence does X (twitter) have on elections?”

“How can misinformation on X be mitigated?”

“What if the owner of X wants to use misinformation to affect the outcome of the presidential election? Can he be stopped?”

ChatGPT did provide answers to these questions, some very interesting.

Interesting, perhaps, but uncheckable as to veracity, the quality of the sources they used, etc.

Sorry, but I’ll stick to actual people who show their work.

You are NOT going to do this shit at this site.

Every time generative AI is used it relies on content it obtained non-consensually, and at great expense to the environment due to the amount of CO2 it generates.

Do this again and you’re banned. I don’t give a flying fuck what your workplace requires though I hope to fuck we legislate controls on AI under a Harris administration so you stop contributing so carelessly to the climate crisis while STEALING IP FROM SITES LIKE THIS ONE.

yesssss captures my sentiments exactly. I can’t believe we’ve decided to reverse any progress we’ve made on energy efficiency as a society for such phenomenally trivial, if not downright harmful, uses. I wish my supervisor understood this.

I just learned today how to change my web browser default search engine to search Google without the AI results using “udm=14” – sharing in case anyone’s interested:

https :// tedium. co/2024/05/17/google-web-search-make-default/

[Moderator’s note: link has been “broken” with blank spaces to prevent accidental click through. Community members should use at their own risk as the site has not been vetted. /~Rayne]

Thanks, but I know nothing about that site.

I used Tom’s Hardware as one source:

https://www.tomshardware.com/software/google-chrome/bye-bye-ai-how-to-block-googles-annoying-ai-overviews-and-just-get-search-results

Thanks! and thanks for the Tom’s Hardware link.

A great policy. With generative learning models that rely on scraping internet sites it’s a classic case of garbage in, garbage out. A disgusting example of AI repeatedly using debunked race science for responses:

https://www.wired.com/story/google-microsoft-perplexity-scientific-racism-search-results-ai/

“AI-infused search engines from Google, Microsoft, and Perplexity have been surfacing deeply racist and widely debunked research promoting race science and the idea that white people are genetically superior to nonwhite people.”

This incident, in a nutshell, exemplifies the challenge of generative AI apart from its theft of humans’ original creative works:

Don’t even get me started on other forms of bias embedded in generative AI to date. It doesn’t even collect published information about the subject as it could have by scraping information about Chanel. Instead it conjugates a guess based on a collected body of material about which we have zero knowledge.

Which is a critical reason why generative AI should not be used in comments here. We deal in reality, in hard facts, not a biased collection system’s aggregated materials polled to produce a guess.

Meanwhile, the dead bird app is awash with AI crap which is intended to fuck with perception — and that output in turn becomes more material which will be fed into the body of data generative systems will use if corporations do not use more discernment than they have as Microsoft demonstrates.

Elmo is just fine with that.

I had two weeks in Glacier NP this summer and I stopped using the platform in mid July but left my account up. I had decided that I wasn’t changing any minds and it had lost its appeal as a news aggregator. I have since put my efforts into a more local focus and have used the information gleaned from this site to call into Wyoming’s right wing radio to point out to the host how he was manipulated into reporting on stories based on disinformation from foreign adversaries. Much of his audience brays for the host to hang up or not have me on, but he is adamant that hearing what the other side is thinking is useful to counter liberals’ arguments.

I am torn about this approach as some argue that getting the right worked up gets them out to vote, but I have continued to argue that disengaging from one group of my local neighbors since the rise of Limbaugh and Fox News has been a failure of the left.

Due to the checks and balances incorporated into our governance, I realize that I have to lose my Wyoming Democrat Senator, Representative, County Attorney and Sheriff in order for things to get really dicey in Laramie. If those things do occur over the next 2 years then all the protections to hold the christian nationalist state legislature at bay will dissolve.

So what is the answer? Engage on Twitter, leave it dormant or some other solution?

If you can afford it, move to Jackson! But seriously, I share your trepidation as the Wyoming governance is really quite deplorable (and that’s with everyone saying “Gordon is one of the better ones”!). I have serious doubts that the winds of change will blow down our way during our lifetime (or at least my remaining time), as Democrats have been pushed to the very fringes of state government now for years.

I might be the last person to think of this, but inviting the people who are already communicating with other voters directly to continue communicating after the election/January 6/January 20 seems like the most obvious thing in the world.

We beg “people who have a platform” to say things – why not keep a progressive platform in place? It’s not about winning presidential elections, or even about elections. It’s about making actual progress, every day.

I think Biden finally figured out that the media were not helpful – that’s why he froze them out when he announced he was dropping out of the race. One hopes Harris has absorbed that lesson!

Failing to continue communication after the election is the mistake Obama and the DNC (under Tim Kaine as chair) made after 2008. By allowing OFA to lapse, they were unable to stem the firehose of crap organized and disseminated by the Koch-funded Tea Party.

The failure to continue dialog using the OFA network also created a vacuum in which the racist backlash against the first Black president could foment.

This is also one of the challenges Harris may already be preparing for with her outreach to never-Trump and other disaffected GOP voters.

So true, Rayne. Obama’s first term seems like ancient history now to most, but it was a critical period during which the frameworks and momentum for the current anti-democratic wave were being generated. My research involved deep dives into the political forces influencing the disposition of high-profile murder cases around the country; over and over, it seemed, I would lift a rock and discover a network–racist, reactionary, deeply conspiracist–in the process of forging itself into the current movement, especially in Texas and other blood-red areas. The links between “grassroots” hate and institutional power, even early on, were the real revelation for me.

Given that this wave (once Tea Party, now MAGA) has captured so many of our neighbors, we will be reckoning with it for a long time. It significantly predates Trump, who opportunistically rode its crest to power and then inflamed it for his own gain.

I wonder what can be done on the state level, considering the level of importance that the actions of individual states will have in the event of a Trump victory. Could Vermont, for example, ban Twitter within its borders much in the way it geofences its ban on internet gambling?

Greg Hunter: “…So what is the answer? Engage on Twitter, leave it dormant or some other solution?”

Or does any participation on Xtter contribute to it?

Perhaps he will pay a “price” after Kamala wins. I do not think it would be Garland doing it least of all because I doubt he will be KH’s AG.

Then there are SpaceX contracts and the improbable fair value takeover of it by the US Government due to its national security impact. Like I said (very) improbable.

He does need to pay a price though.

The last time I did this calculation, the Falcon Heavy put a payload into orbit at about one-tenth the cost of any other system. That’s a very big thing. Competitors show few signs of catching up. That is not where I would look to snap the reins on Musk.

Not that I don’t think we should try to do it. I do. AND, I like the space program and SpaceX’s contribution to it.

Also, I think there’s probably plenty of other vulnerabilities that Musk has scattered about the landscape, since he’s nearly as prolific with it as he is with his sperm.

Interesting article with excellent research and analysis as always! Great information!

I’ve always been concerned that the Federal Government never vetted Musk for organized crime connections to Putin, Mogilevich and Abramovich.

The Clinton Administration, including the DOJ and the Defense Department, was a mess during its last two years.

And the subsequent G.W. Bush Administration was dealing with 9/11 for most of its existence.

Trump’s 2024 campaign over the past three weeks looks more like a campaign for the Trump/Musk ticket, or even the Musk/Trump ticket than the Trump/Vance ticket.

And that’s not a surprise since Vance is nothing more than a “tool” for Musk, Trump, Peter Thiel and, likely, Bezos, too.

Considering the stakes in this election and beyond, I’m beginning to think Elmo didn’t overpay for Xitter, after all.

You mean his financiers including Saudi prince Alwaleed and Qatar’s sovereign wealth fund.

Point taken. I tend to focus on the villains in front of the curtain, but I need to go deeper.

Promoting false claims and misinformation? Here’s an interesting parallel.

Quote from a recently filed lawsuit by a mother whose young son committed suicide after extensive interactions with an AI system. The mother is suing the system developers.

“AI developers intentionally design and develop generative AI systems with anthropomorphic qualities to obfuscate between fiction and reality. To gain a competitive foothold in the market, these developers rapidly began launching their systems without adequate safety features, and with knowledge of potential dangers. These defective and/or inherently dangerous products trick customers into handing over their most private thoughts and feelings and are targeted at the most vulnerable members of society — our children.”

(Megan Garcia, et al. v. Character Technologies Inc., et al., No. 24-1903, M.D. Fla.)

To me, that’s Trump: Obfuscate between fiction and reality, without adequate safety features, with knowledge of potential dangers, tricking people into doing things they shouldn’t, and targeting vulnerable members of our society.

Boycott: In 1978, through a boycott (learned from Cesar Chavez, Martin Luther King, Gandhi) of Anita Bryant Tropicana orange juice & others, the Gay community beat what were at the time HUGE odds and defeated Prop 6, which would have fired all Gay teachers here in California. We all thought the movement was over and worse. Lawyers, Doctors, on down the line would be next. AIDS showed up not long after. Think about it. Every time a link takes me to Xitter, I immediately click back. This isn’t rocket science. It’s called feeding the monster. WE can do better. I don’t do guilt. Just info.

TFG said yesterday, per The Guardian news link: “She’s a radical war hawk,” he said of Liz Cheney. Then he said: “Let’s put her with a rifle standing there with nine barrels shooting at her. Let’s see how she feels about it. You know, when the guns are trained on her face.” I’m so stunned I’m speechless. Do the nine barrels connote something government or tyrant sanctioned?

Tangentially on-topic, re election f*ckery. Totally legit!:

Yup. Got my eye on that asshole. Gotta track the Trump people charged for cheating.

Precious. About as smart an admission as any made by Stephen Collins. If true, this property manager should be facing multiple sets of charges for violating mail laws as well as for voter fraud.

And bonus points for it happening in a state that will be called a minute after the polls close. Which means either turbo-moron or tool for some idiotic, bound-for-failure attempt at claiming California’s elections were compromised and stalling certification.

Ah, but you see, he clearly represents the great rural (non)state of Jefferson, which spans much of upper northern CA and eastern Oregon.

Yes, Redding is the seat of Shasta County, which is dominated by batshit crazies.

Prop 33 may have had something to do with his desire to vote multiple times.

New term on the hustings:

The ‘Nauseously Optimistic’ Kamala Campaign

Link: https://nymag.com/intelligencer/article/kamala-harris-campaign-early-voting-battleground-states.html

I hate to do this (especially when you, punaise, brought it up!), but “nauseous” doesn’t mean what the aphorizer wants it to, even though it sounds pretty great.

Granted, “Nauseatedly Optimistic” just doesn’t have the same ring. Or any ring at all.

punaise

November 1, 2024 at 4:07 pm

Ughh. As someone who has had the…privilege of driving through there frequently on my way to Oregon, if I never see those three x’s again it will still be too soon.

There’s normal Northern California (Bay Area and up as far as … Mendocino / Chico?), then there’s extreme Northern California.

re: Participate on X, I figure any post is an offering of fodder for misuse by propagandists and abusers, not to mention that it provides data for Xitter. Posting facts, logic, reasonable propositions there is an act of infowarfare, so one had better be prepared to fight. I’m grateful for any knight shining light like Emptywheel.

Hasn’t Twitter/X been operating in violation of its consent decrees since before Musk took it over?

It has to be operating in violation of those decrees since he sacked all those people in charge of corporate compliance around the same time he walked into the headquarters with a toilet.

I believe there was great reluctance to shut it down at the time because of how important the platform was globally.

With those violations (not to mention definite failures to comply with European privacy laws) couldn’t the US legally shut down X?

Asking because I work for a software company and have to take multiple security and government compliance and anti-corruption trainings every year, but I am not a lawyer.

It’s not just Elmo disseminating disinformation; many right-leaning polls are stating Trump has the election in the bag. This is false information, put out only so that Trump can point to these “polls” when he loses next week and files lawsuits for “voter fraud,” while riling up his MAGA bros to “fight” for his “legitimate return” to the WH that was “stolen from him.”

https://dnyuz.com/2024/10/31/why-the-right-thinks-trump-is-running-away-with-the-race/

https://www.theguardian.com/us-news/2024/nov/01/republicans-donald-trump-polls-us-election-lawsuits

This may be the “little secret” that Trump referenced several times to Speaker Mike Johnson who was sitting in the audience last Sunday night at Madison Square Garden.

“‘I think with our little secret we’re going to do really well with the House, right?” he said, with an air of slyness to himself. “Our little secret is having a big impact. He and I have a little secret—we will tell you what it is when the race is over.” Johnson stirred the pot the following day by confirming some sort of clandestine understanding between the two men. “By definition, a secret is not to be shared—and I don’t intend to share this one,” he said in a statement.’”

https://www.msn.com/en-us/news/politics/ar-AA1tkasL

Musk is all in for Trump and Putin; he is not a useful idiot. He is totally complicit, and wants a seat at the dictator’s table.

great post. thanks, marcy.

“And then, shortly thereafter, the idea was born for the richest man in the world to buy Twitter.”

I wonder about this idea’s conception. Just one megalomaniacal father or were there others?

Even if he (anti)woke up one night with a Eureka! moment there must have been many discussions with others post-partum about its value, financially and otherwise, how much to pay, raising the finance. Eventually it was decided to pay twice as much as Twitter was then worth rather than pay the relatively small penalty and walk away.

I decided Musk was a nasty piece of work after his petulant and cruel reaction when he didn’t get his way in the Thai cave rescue, but his statement that civil war in the UK is inevitable showed Trumpian levels of malicious invention, astounding ignorance or both. We haven’t had one for more than 370 years and there’s no possibility of one now.

Now that we learn that both he and Trump (and probably Vance’s master Thiel) have been talking privately to Putin, I wonder just who had a hand in and directed this.

ps.

“Russian spies (except Tommy Robinson, …”

The use of a dash then a ( had me thinking of *that* nasty piece of work in a new light for a few puzzled moments.