Matt Taibbi Is Furious that Election Integrity Partnership Documented How Big Trump’s Big Lie Was

As you’ve no doubt heard, #MattyDickPics Taibbi went on Mehdi Hasan’s show yesterday and got called out for his false claims.

“Well, that, then, is an error.”

– @mtaibbi, confronted with previously unacknowledged mistakes in his Twitter Files reporting, by @mehdirhasan. Watch the full conversation later tonight: https://t.co/WI2GDlekQP pic.twitter.com/iYM1n7xOaN

— The Mehdi Hasan Show (@MehdiHasanShow) April 6, 2023

After the exchange, #MattyDickPics made a show of “correcting” some of his false claims, which in fact consisted of repeating the false claims while taking out the proof, previously included in the same tweet, that he had misquoted a screen cap to sustain his previous false claim.

#MattyDickPics made a mishmash of these same claims in his sworn testimony before Jim Jordan’s committee, which may be why he doesn’t want to make wholesale corrections. I look forward to him correcting the record on false claims made under oath.

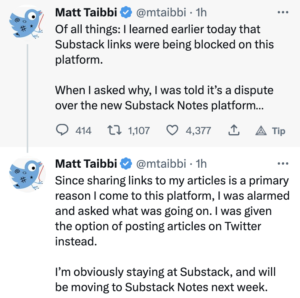

#MattyDickPics also wrote a petulant post announcing that MSNBC sucks, in which, after a bunch of garbage that repeatedly cites Jeff Gerth as a factual source (!!!), he finally gets around to admitting how sad he is that no one liked his Twitter Files thread making claims about the FBI.

After the first thread, Mehdi was one of 27 media figures to complain in virtually identical language: “Imagine volunteering to do PR work for the world’s richest man.”

I laughed about that, but couldn’t believe the reaction after Twitter Files #6, showing how Twitter communicated with the FBI and DHS through a “partner support channel,” and in response to state requests actioned accounts on both sides of the political aisle for harmless jokes. Mehdi’s take wasn’t that this information was wrong, or not newsworthy, but that it shouldn’t have been published because Elon Musk put Keith Olbermann in timeout for a day, or something. “Even Bari Weiss called him out, but Taibbi seems to want to tweet through it,” Mehdi tweeted.

If it sounds like my beef with MSNBC is personal, by now it is. Take the Twitter Files. When first presented with the opportunity to do that story, my first reaction was to be extremely excited, as any reporter would be, including anyone at MSNBC. In the next second however I was terrified, because I care about my job, and knew there would be a million eyes on this thing and a long way down if I got anything wrong. If you’ve ever wondered why I look 100 years old at 53 it’s because I embrace this part of the process. Audiences have a right to demand reporters lie awake nights in panic, and every good one I’ve ever met does.

But people who used to be my friends at MSNBC embraced a different model, leading to one of the biggest train wrecks in the history of our business. Now they have the stones to point at me with this “What happened to you?” routine. It’s rare that the following words are justified on every level, but really, MSNBC: Fuck you.

As I showed, #MattyDickPics made a number of egregiously false claims in that very same Twitter Files #6, the very same one he’s stewing over because it wasn’t embraced warmly.

But one of the other key false claims Mehdi caught #MattyDickPics making is far more important: the claim that the Election Integrity Partnership (EIP) “censored” 22 million tweets. In his tweet, he claimed that “According to EIP’s own data, it succeeded in getting nearly 22 million tweets labeled in the runup to the 2020 vote.”

After Mehdi posted the appearance, #MattyDickPics “removed” his error.

Then, after a guy named Mike Benz, who is at the center of this misinformation project, misinformed him, #MattyDickPics reverted to his original false claim.

As to the factual dispute, there is none. #MattyDickPics and his Elmo-whisperer Mike Benz are wrong. The error stems from either an inability to read an academic methodology statement or the ethic among these screencap boys that says you can make any claim you want so long as you have a screencap with a key word in it.

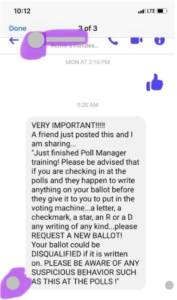

At issue is a report the Election Integrity Partnership released in 2021 describing its two-phase intervention in the 2020 election. The first phase consisted of ticketing mis- or disinformation in real time in an attempt to stave off confusion about the election. Here’s the example of real-time ticketing the report includes.

To illustrate the scope of collaboration types discussed above, the following case study documents the value derived from the multistakeholder model that the EIP facilitated. On October 13, 2020, a civil society partner submitted a tip via their submission portal about well-intentioned but misleading information in a Facebook post. The post contained a screenshot (See Figure 1.4).

Figure 1.4: Image included in a tip from a civil society partner.

In their comments, the partner stated, “In some states, a mark is intended to denote a follow-up: this advice does not apply to every locality, and may confuse people. A local board of elections has responded, but the meme is being copy/pasted all over Facebook from various sources.” A Tier 1 analyst investigated the report, answering a set of standardized research questions, archiving the content, and appending their findings to the ticket. The analyst identified that the text content of the message had been copied and pasted verbatim by other users and on other platforms. The Tier 1 analyst routed the ticket to Tier 2, where the advanced analyst tagged the platform partners Facebook and Twitter, so that these teams were aware of the content and could independently evaluate the post against their policies. Recognizing the potential for this narrative to spread to multiple jurisdictions, the manager added in the CIS partner as well to provide visibility on this growing narrative and share the information on spread with their election official partners. The manager then routed the ticket to ongoing monitoring. A Tier 1 analyst tracked the ticket until all platform partners had responded, and then closed the ticket as resolved.

It wasn’t a matter of policing speech. It was a matter of trying to short circuit even well-meaning rumors before they start going viral.

According to the report, social media companies acted on 35% of the identified tickets, most often those claiming victory before the election had been called. Just 13% of all those items ticketed were removed.

35% of the URLs we shared with Facebook, Instagram, Twitter, TikTok, and YouTube were either labeled, removed, or soft blocked. Platforms were most likely to take action on content that involved premature claims of victory.

[snip]

We find, overall, that platforms took action on 35% of URLs that we reported to them. 21% of URLs were labeled, 13% were removed, and 1% were soft blocked. No action was taken on 65%. TikTok had the highest action rate: actioning (in their case, their only action was removing) 64% of URLs that the EIP reported to their team.
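As a quick sanity check on that breakdown, here is a minimal sketch that adds up the action rates quoted from the report. The variable names are mine, and the report's percentages are rounded, so treat this as arithmetic on the published figures rather than on EIP's underlying data.

```python
# Action rates on reported URLs, as quoted from the EIP report (rounded percentages).
labeled = 21
removed = 13
soft_blocked = 1

# Any action at all, versus no action.
actioned = labeled + removed + soft_blocked
no_action = 100 - actioned

print(f"actioned: {actioned}%, no action: {no_action}%")
```

The three categories account for the 35% figure, leaving the 65% on which platforms took no action.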

Then, after the election, EIP looked back and pulled together all the election-related content to see what kinds of mis- and disinformation had been spread, including after the election. Starting in Chapter 3, the report describes the waves of mis- and disinformation they identified, from claims about mail-in voting, to claims about how the votes would be counted, to organized efforts to “Stop the Steal” that resulted in the January 6 attack. It looked at a number of case studies, including Stop the Steal; the false claims about Dominion, on which Dominion has already been granted partial summary judgment in its lawsuit against Fox; and nation-state campaigns. The latter include the Iranian one that involved posing as Proud Boys to threaten Democratic voters, a campaign #MattyDickPics has systematically ignored.

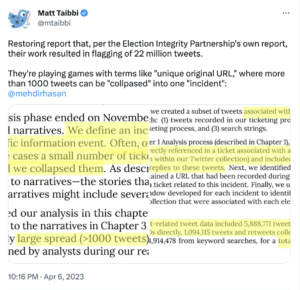

Chapter 5 describes the historical review that #MattyDickPics misrepresented. It makes clear that this analysis was done after the fact, starting only after November 30.

Through our live ticketing process, analysts identified social media posts and other web-based content related to each ticket, capturing original URLs (as well as screenshots and URLs to archived content). In total, the EIP processed 639 unique tickets and recorded 4,784 unique original URLs. After our real-time analysis phase ended on November 30, 2020, we grouped tickets into incidents and narratives. We define an incident as an information cascade related to a specific information event. Often, one incident is equivalent to one ticket, but in some cases a small number of tickets mapped to the same information cascade, and we collapsed them. As described in Chapter 3, incidents were then mapped to narratives—the stories that develop around these incidents—where some narratives might include several different incidents. [my emphasis]

Then it describes how EIP collected data for this historical review. One of three sources of data used in the review was Twitter’s API (the other two were original tickets and data from Facebook and Instagram). Starting from a dataset of 859 million tweets pertaining to the election, EIP pulled out nearly 22 million tweets that involved “election incidents” of previously identified mis- or disinformation.

We collected data from Twitter in real time from August 15 through December 12, 2020. Using the Twitter Streaming API, we tracked a variety of election-related terms (e.g., vote, voting, voter, election, election2020, ballots), terms related to voter fraud claims (e.g., fraud, voterfraud), location terms for battleground states and potentially newsworthy areas (e.g., Detroit, Maricopa), and emergent hashtags (e.g., #stopthesteal, #sharpiegate). The collection resulted in 859 million total tweets.

From this database, we created a subset of tweets associated with each incident, using three methods: (1) tweets recorded in our ticketing process, (2) URLs recorded in our ticketing process, and (3) search strings.

Relying upon our Tier 1 Analysis process (described in Chapter 1), we began with tweets that were directly referenced in a ticket associated with an incident. We also identified (from within our Twitter collection) and included any retweets, quote tweets, and replies to these tweets. Next, we identified tweets in our collection that contained a URL that had been recorded during Tier 1 Analysis as associated with a ticket related to this incident. Finally, we used the search string and time window developed for each incident to identify tweets from within our larger collection that were associated with each election integrity incident.

In total, our incident-related tweet data included 5,888,771 tweets and retweets from ticket status IDs directly, 1,094,115 tweets and retweets collected first from ticket URLs, and 14,914,478 from keyword searches, for a total of 21,897,364 tweets.
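To make the provenance of the “22 million” figure concrete, here is a minimal sketch that reproduces the arithmetic from the numbers quoted above. The variable names are mine, not EIP's, and because the report rounds the full collection to 859 million tweets, the share of the collection is approximate.

```python
# Tweet counts quoted from the EIP report's description of its incident dataset.
from_ticket_status_ids = 5_888_771   # tweets and retweets referenced directly in tickets
from_ticket_urls = 1_094_115         # tweets and retweets containing ticketed URLs
from_keyword_searches = 14_914_478   # tweets matched by per-incident search strings
reported_total = 21_897_364

# Full election-related collection; the report gives this rounded to 859 million.
collected_tweets = 859_000_000

incident_total = from_ticket_status_ids + from_ticket_urls + from_keyword_searches

# How much of the whole collection the incident subset represents (approximate).
share_of_collection = incident_total / collected_tweets

# How much of the incident subset came from after-the-fact keyword searches.
keyword_share = from_keyword_searches / incident_total

print("sum matches reported total:", incident_total == reported_total)
print(f"share of collected tweets: {share_of_collection:.1%}")
print(f"share found via keyword search: {keyword_share:.0%}")
```

The three components sum to the 21,897,364 total, and roughly two-thirds of that total comes from keyword searches run against the collected dataset rather than from tweets named in tickets.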

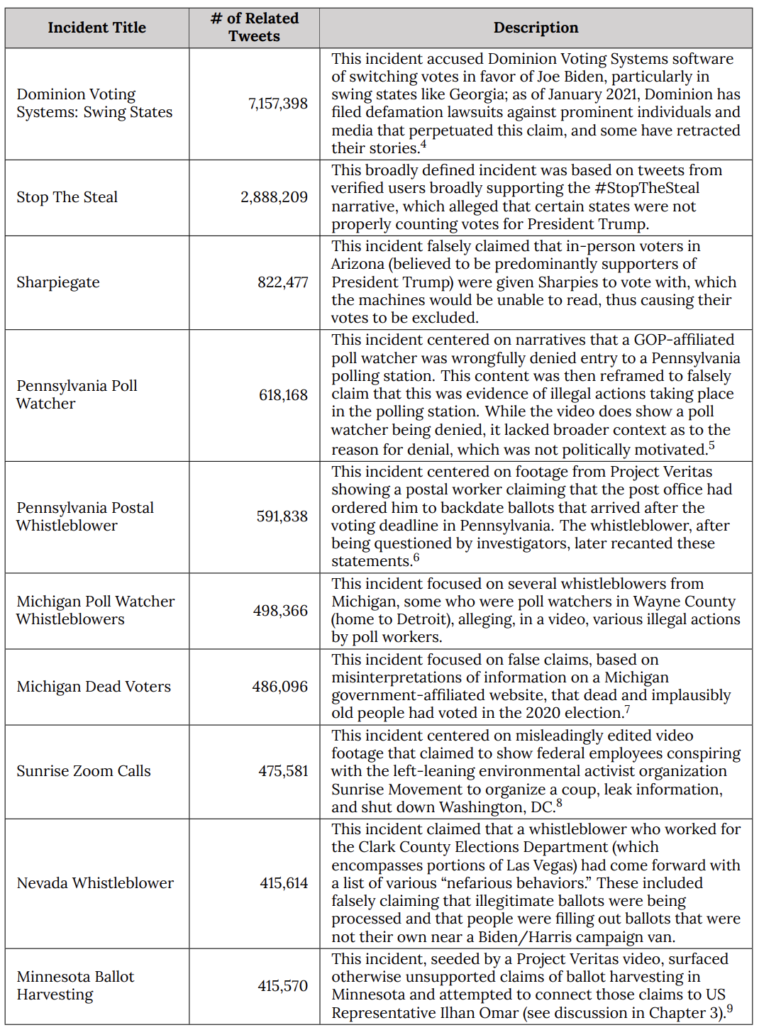

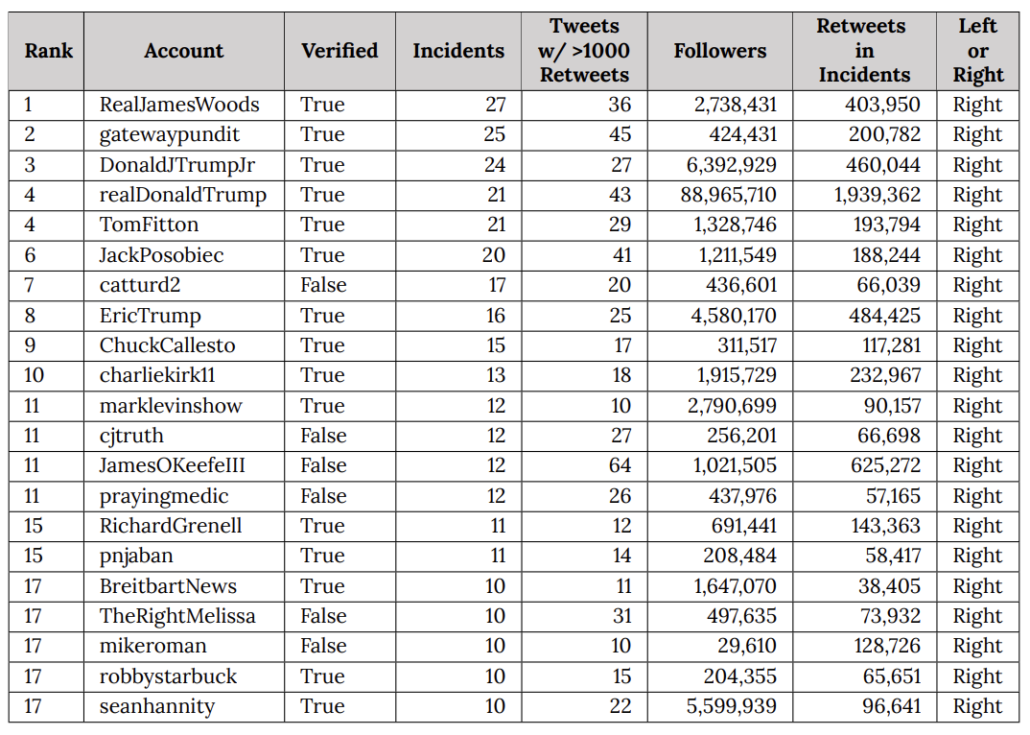

Here’s the EIP table of its top-10 most viral examples of mis- or disinformation, amounting to over 14 million of the tweets in question. Right away, it should alert you to the effect, if not the goal, of conflating EIP’s real-time tickets to social media companies, including of things like an overgeneral statement about how ballots are treated in different states, with what EIP found in their historical review of how mis- and disinformation worked in 2020.

What #MattyDickPics and his Elmo whisperer Mike Benz are complaining about is not that EIP attempted to “censor” speech in real time. What they’re complaining about is that a bunch of academics and other experts figured out what the scale and scope of mis- and disinformation was in 2020. And what those experts showed is that systematic Republican disinformation (and mind you, this is just the disinformation through December 12; it missed the bulk of the build-up to January 6) made up the vast majority of mis- and disinformation that went viral in 2020. It showed that, even by December 12, almost 45% of the mis- and disinformation on Twitter consisted of two campaigns tied to Trump’s Big Lie, the attacks on Dominion and the organized Stop the Steal campaign.

EIP’s list of repeat spreaders is still more instructive, particularly when you compare it against the list of people that Elmo has welcomed back to Twitter since he took over.

What EIP did was catalog how central disinformation from Trump and his family, along with that of close allies in the insurrection, was in the entire universe of mis- and disinformation (Mike Roman, one of the least known people on this list, had his phone seized as part of the January 6 investigation last year).

Some mis- and disinformation did not go viral in 2020. What did, overwhelmingly, was that which Trump and his allies made sure to promote.

The dataset of 22 million tweets is not a measure of mis- or disinformation identified in real time. What it is, though, is a measure of how central Trump is to disinformation on social media.

Whether or not #MattyDickPics understands the effect of the stubborn false claim that Mike Benz fed him, and whether or not he understands how that false claim provides Elmo cover to replatform outright white supremacists, the effect is clear.

The concerted effort to discredit the Election Integrity Partnership has little effect on flagging mis- or disinformation in real time. What it does, however, is discredit efforts to track just how central Trump is to election disinformation in the US.

Update: Here’s the full Mehdi Hasan interview.

Update! Oh no!! Drama!!